Honestly, it feels like every week there's a new, more powerful AI model hitting the headlines, and that's awesome. But there's a quiet, massive problem brewing behind the scenes of all this innovation: the sheer scale of energy and data consumption. The AI boom, especially in generative models, is putting an unprecedented strain on our Earth-based infrastructure.

The AI Energy Crisis on Earth

The problem isn't just that AI uses electricity; it's the exponential growth. Training a single massive language model can consume enough electricity to power entire small towns for a year, and that’s before you even get to running it for millions of users (a process called inference).

Here's the one-two punch that’s hitting terrestrial data centers:

- Power Draw: Global data center energy demand is already comparable to that of entire countries. With AI workloads, which are intensely data-heavy and compute-hungry, this demand is projected to soar, putting severe pressure on local power grids and, frankly, increasing our reliance on fossil fuels in many regions.

- The Cooling Tax: A huge chunk of that electricity, sometimes up to one-third, is dedicated just to cooling the servers. Those powerful GPUs that crunch AI numbers generate immense heat. On Earth, we rely on expensive, water-intensive cooling systems (like giant chillers) to keep the chips from melting. This is a massive cost and an environmental headache.
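To put the "cooling tax" in numbers, here is a minimal sketch of the arithmetic. The function names and the 900 kWh figure are made up for illustration, and the one-third share is the upper-end figure quoted above, not a measurement. It also assumes that everything not spent on cooling goes to the servers themselves, which real facilities only approximate.

```python
def cooling_energy_kwh(total_kwh: float, cooling_share: float = 1 / 3) -> float:
    """Energy spent on cooling, given the facility total and the cooling share."""
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    return total_kwh * cooling_share


def overhead_ratio(cooling_share: float = 1 / 3) -> float:
    """Total energy drawn per unit of energy that actually reaches the servers.

    Assumption: all non-cooling energy is useful compute energy.
    """
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    return 1.0 / (1.0 - cooling_share)


if __name__ == "__main__":
    total = 900.0  # hypothetical facility consumption in kWh
    print(f"Cooling energy: {cooling_energy_kwh(total):.1f} kWh")
    print(f"Overhead ratio: {overhead_ratio():.2f}")
```

Under these assumptions, a one-third cooling share means the facility draws about 1.5 units of electricity for every unit that reaches the chips.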

We're trying to build faster, cleaner data centers, but the physical constraints of Earth are becoming a serious bottleneck.

Starcloud’s Radical Solution: The Orbital Cloud

Enter Starcloud, a company with a truly transformative, almost sci-fi approach: why not build the data centers in space?

This isn't just a fun stunt; it's a revolutionary way to hack the physics of the problem, offering two key advantages that are impossible to match on the ground:

- Nearly Unlimited Energy: In Low Earth Orbit (LEO), there are no clouds, no night (in certain orbits), and no atmosphere to filter the sun's power. Starcloud’s orbital facilities can harness a constant, high-irradiance solar energy supply, giving them access to virtually unlimited, low-cost renewable power. This dramatically slashes the operating costs and carbon footprint associated with energy generation.

- The Ultimate Heat Sink: Perhaps the coolest part (pun intended) is the cooling. Deep space is an incredible natural heat sink, with a background temperature near absolute zero (approximately -270 °C). Starcloud uses passive radiative cooling, simply radiating the waste heat away into the cosmos, which eliminates the need for massive, water-guzzling chiller systems entirely. The long-term vision aims to cut total lifecycle carbon emissions by a factor of ten compared with terrestrial centers.
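For a rough feel of the physics behind both advantages, here is a back-of-the-envelope sketch. It is not Starcloud's design: the 1 kW load, 300 K radiator temperature, 0.9 emissivity, and 30% solar cell efficiency are all illustrative assumptions. Radiated heat follows the Stefan-Boltzmann law, and the solar constant (about 1361 W/m²) is the sunlight available above the atmosphere. The sketch ignores sunlight and Earth's infrared absorbed by the radiator, so real radiators would need to be larger.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)
SOLAR_CONSTANT = 1361.0            # W / m^2, mean irradiance above the atmosphere


def radiator_area_m2(
    heat_w: float,
    radiator_temp_k: float = 300.0,  # assumed radiator surface temperature
    emissivity: float = 0.9,         # assumed surface emissivity
    sink_temp_k: float = 3.0,        # deep-space background, roughly -270 C
    sides: int = 1,                  # 2 if both faces radiate to space
) -> float:
    """Idealized radiator area needed to reject heat_w watts by radiation alone."""
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be warmer than the sink")
    # Net radiated power per square metre of one face (Stefan-Boltzmann law).
    flux = emissivity * STEFAN_BOLTZMANN * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux * sides)


def solar_array_area_m2(power_w: float, efficiency: float = 0.3) -> float:
    """Panel area needed to generate power_w watts in continuous sunlight."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return power_w / (SOLAR_CONSTANT * efficiency)


if __name__ == "__main__":
    load_w = 1000.0  # hypothetical 1 kW compute payload
    print(f"Radiator area:    {radiator_area_m2(load_w):.2f} m^2")
    print(f"Solar array area: {solar_array_area_m2(load_w):.2f} m^2")
```

With these numbers, each kilowatt of compute needs a few square metres of radiator and a similar area of solar panel. Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks it quickly, which is one reason thermal design is a central engineering question for orbital hardware.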

The Next Giant Leap: NVIDIA H100 in Orbit

This space-based cloud is about to get a serious power upgrade. Starcloud’s upcoming demonstrator satellite is set to feature the NVIDIA H100 GPU, a flagship AI accelerator known for its massive performance. This move is a powerful leap for space-based computing, promising 100 times more powerful AI compute than has ever been operated in orbit before.

While technical hurdles such as radiation hardening and reliable connectivity over high-speed laser links are substantial, the promised reward is a highly scalable, hyper-efficient, and sustainable compute platform.

This isn't a replacement for all data centers yet, but it’s a brilliant idea for energy-intensive, latency-tolerant workloads. Starcloud is essentially positioning orbital data centers as the only path to a sustainable gigawatt-scale AI future, finally allowing our models to grow without suffocating our planet. It’s a bold vision, but given the trajectory of AI, we might all be running our next massive model in the clouds, the literal ones, that is.

Inspire Others – Share Now

Agentic AI Saksham

India’s Only 1st Ever Offline Hands-on program that adds 4 Global Certificates while making you a real engineer who has built their own AI Agents

EV

Saksham

India’s Only 1st Ever Offline Hands-on program that adds 4 Global Certificates while making you a real engineer who has built their own vehicle

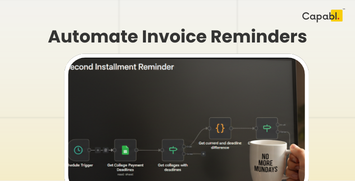

Agentic AI LeadCamp

From AI User to AI Agent Builder — Capabl empowers non-coding professionals to ride the AI wave in just 4 days.

Agentic AI MasterCamp

A complete deployment ready program for Developers, Freelancers & Product Managers to be Agentic AI professionals

.png)