1. Introduction: The Paradigm Shift from Tools to Partners

The current landscape of generative AI, dominated by Large Language Models (LLMs) like GPT-4, is largely reactive. A user provides a prompt; the model provides a completion. This interaction, while powerful, is a single-turn dialogue with a stateless entity. It lacks persistence, autonomy, and the ability to effect change in the external world.

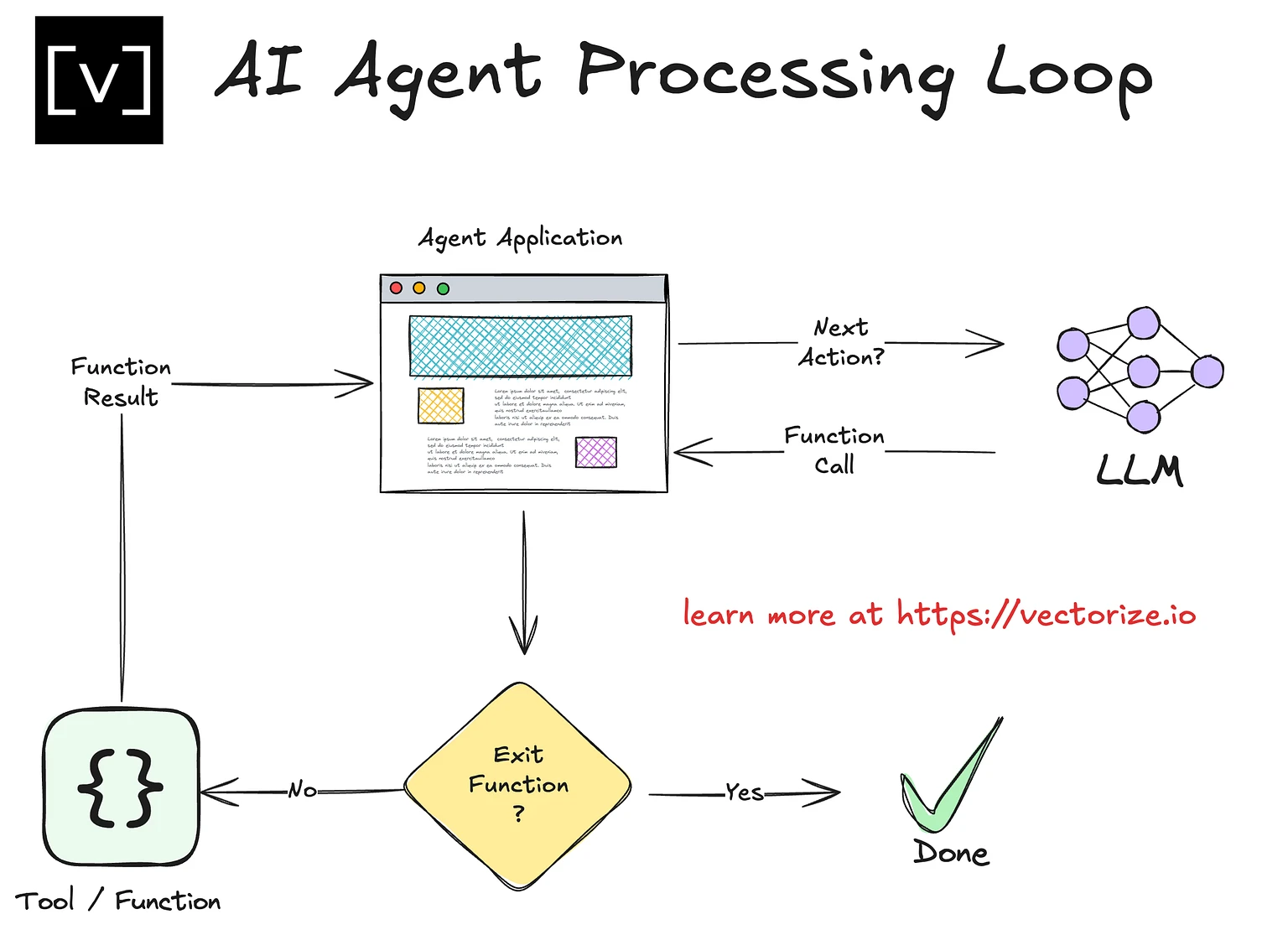

AI Agents shatter this paradigm. An AI Agent is an integrated system that uses an LLM as its core reasoning engine to:

- Perceive a high-level, often complex, goal from a user.

- Plan a sequence of actions to achieve that goal.

- Execute those actions by leveraging a suite of tools (APIs, functions, code execution).

- Observe the outcomes and iterate on the plan recursively until the goal is met or a termination condition is reached.

In essence, an AI Agent is a meta-cognitive framework that transforms a powerful but limited LLM into an autonomous, goal-directed cognitive entity. This marks the transition from using AI as a tool to collaborating with AI as a partner.

2. The Core Architectural Components of an AI Agent

The power of an agent emerges from the sophisticated interplay of four core components. Understanding each is crucial to grasping how agents function.

2.1. The Reasoning Engine (The "Brain")

At the heart of every agent is a Large Language Model (LLM). Its primary role is not to provide final answers, but to serve as a universal reasoning engine and task planner.

- Function: The LLM interprets the user's goal, breaks it down into a logical sequence of sub-tasks (planning), and decides which tool to use at each step (orchestration).

- Key Consideration: Frontier models such as GPT-4 Turbo and Claude 3 Opus are common choices, but smaller or open-weight models tuned for reasoning and instruction-following (e.g., Mixtral 8x22B) can be more cost-efficient for agentic workflows. The choice of LLM directly affects the agent's planning accuracy and cost-effectiveness.
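As a rough illustration of the planning and orchestration role described above, the sketch below parses a plan that an LLM might return as JSON and checks that every step names a known tool. The JSON shape, the `PlanStep` class, and the tool names are assumptions made for this example, not a format that any particular model or framework prescribes.

```python
import json
from dataclasses import dataclass


@dataclass
class PlanStep:
    """One sub-task in a plan, paired with the tool chosen to carry it out."""
    description: str
    tool: str


def parse_plan(raw_json: str, known_tools: set[str]) -> list[PlanStep]:
    """Turn an LLM's JSON plan into PlanStep objects, rejecting unknown tools."""
    steps = []
    for item in json.loads(raw_json):
        if item["tool"] not in known_tools:
            raise ValueError(f"Plan references unknown tool: {item['tool']}")
        steps.append(PlanStep(description=item["description"], tool=item["tool"]))
    return steps


# Hypothetical model output for the goal "Summarize today's weather in Paris".
llm_output = """
[
  {"description": "Look up the current weather in Paris", "tool": "web_search"},
  {"description": "Write a two-sentence summary", "tool": "summarize"}
]
"""

plan = parse_plan(llm_output, known_tools={"web_search", "summarize"})
for number, step in enumerate(plan, start=1):
    print(f"{number}. {step.description} -> {step.tool}")
```

Rejecting unknown tool names at parse time is one simple way to catch a hallucinated action before anything is executed.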

2.2. The Tool Set (The "Hands and Senses")

Tools are the interfaces through which an agent perceives and interacts with the world. They are functions that extend the agent's capabilities beyond its parametric knowledge.

- Categories of Tools:

  - Information Retrieval: `web_search(query)`, `query_database(sql_query)`, `read_file(path)`.

  - Computation & Code: `execute_python(code)`, `execute_javascript(code)`, `run_shell_command(command)`.

  - Software & API Interaction: `send_email(to, subject, body)`, `create_calendar_event(details)`, `call_rest_api(endpoint, payload)`.

  - Sensory Input: `transcribe_audio(file)`, `analyze_image(image_url)`.

- Implementation: Tools are typically implemented as functions with well-defined schemas (name, description, parameters) that are passed to the LLM, allowing it to understand when and how to call them.
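A minimal sketch of what "a function with a well-defined schema" could look like follows. The `word_count` tool, the registry layout, and the helper names are hypothetical. The JSON-Schema-style `parameters` field resembles what several function-calling APIs accept, but the exact field names expected by a given provider should be checked against its documentation.

```python
import json


def word_count(text: str) -> int:
    """Hypothetical example tool: count whitespace-separated words."""
    return len(text.split())


# Schema handed to the LLM so it knows when and how to call the tool.
WORD_COUNT_SCHEMA = {
    "name": "word_count",
    "description": "Count the words in a piece of text.",
    "parameters": {
        "type": "object",
        "properties": {
            "text": {"type": "string", "description": "The text to measure."}
        },
        "required": ["text"],
    },
}

# Registry mapping each tool name to (callable, schema).
TOOL_REGISTRY = {"word_count": (word_count, WORD_COUNT_SCHEMA)}


def describe_tools(registry: dict) -> str:
    """Render every schema as JSON so it can be placed in the LLM prompt."""
    return json.dumps([schema for _, schema in registry.values()], indent=2)


def call_tool(registry: dict, name: str, arguments: dict):
    """Check required parameters against the schema, then invoke the function."""
    function, schema = registry[name]
    missing = [p for p in schema["parameters"]["required"] if p not in arguments]
    if missing:
        raise ValueError(f"Missing required parameters for {name}: {missing}")
    return function(**arguments)


print(describe_tools(TOOL_REGISTRY))
print(call_tool(TOOL_REGISTRY, "word_count", {"text": "agents call tools"}))
```

Keeping the schema next to the callable means the description shown to the model and the code that actually runs are less likely to drift apart.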

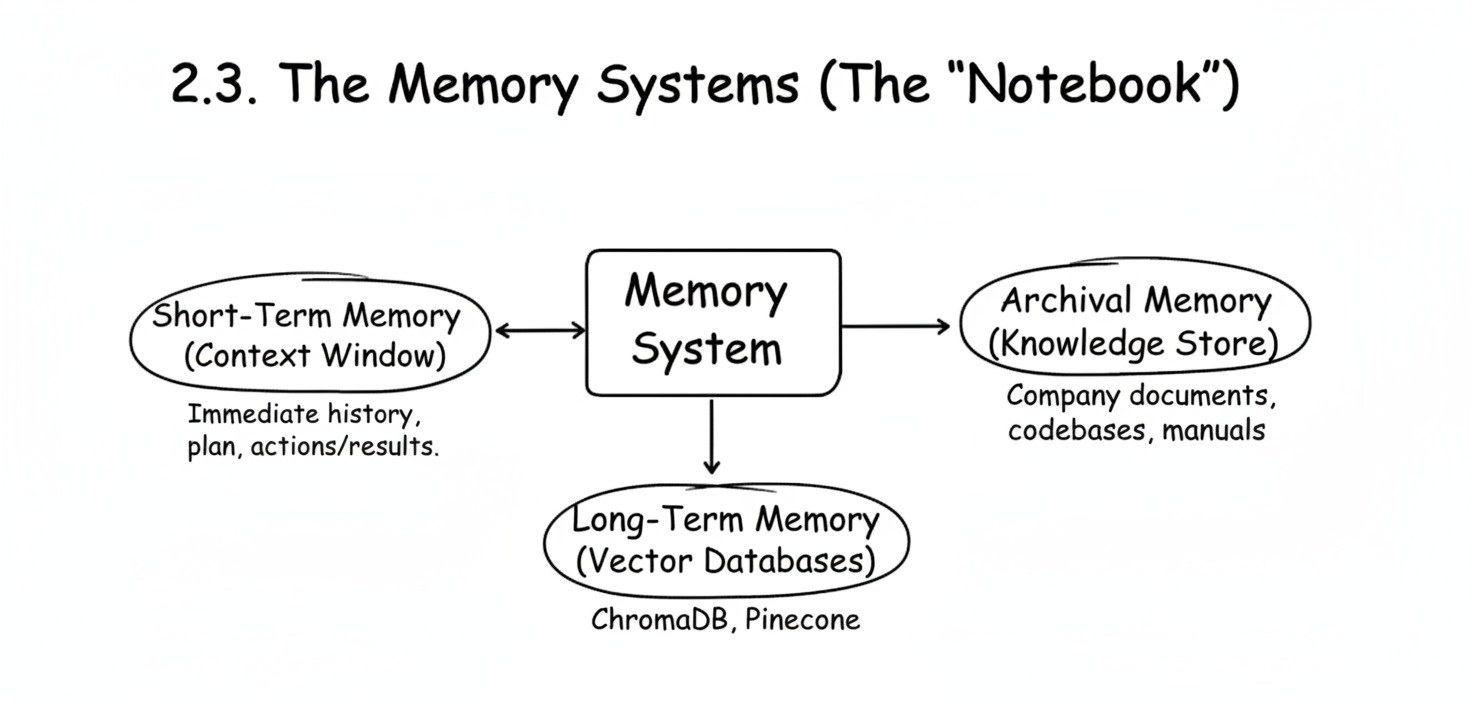

2.3. The Memory Systems (The "Notebook")

Memory is what gives an agent context and continuity, preventing it from being trapped in a single prompt context window. It operates on multiple levels:

- Short-Term Memory (Context Window): The immediate history of the current task: the plan, previous actions, and their results. This is managed within the context of the LLM call.

- Long-Term Memory (Vector Databases): For tasks that exceed the context window or need persistence across sessions, agents write and read from external vector databases (e.g., ChromaDB, Pinecone). This allows them to "remember" past interactions, user preferences, and learned information.

- Archival Memory (Knowledge Store): A vast, pre-loaded database of company documents, codebases, or manuals that the agent can search through using semantic search to inform its actions.
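The difference between the short-term and long-term tiers can be sketched with the standard library alone. This is a toy under stated assumptions: the bag-of-words `embed` function stands in for a learned embedding model, and the in-memory `LongTermMemory` class stands in for a vector database such as ChromaDB or Pinecone. Neither reflects those products' actual APIs.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (real systems use a learned model)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LongTermMemory:
    """In-memory stand-in for a vector store: write text, search by similarity."""

    def __init__(self):
        self._entries = []  # list of (text, vector) pairs

    def write(self, text: str) -> None:
        self._entries.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(
            self._entries,
            key=lambda entry: cosine_similarity(query_vector, entry[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:top_k]]


# Short-term memory: a bounded window, like a context window that drops old steps.
short_term = deque(maxlen=3)
for event in ["plan created", "searched web", "read result", "drafted summary"]:
    short_term.append(event)

# Long-term memory: survives beyond the window and is retrieved by meaning.
long_term = LongTermMemory()
long_term.write("The user prefers metric units")
long_term.write("The user's favorite editor is Vim")

print(list(short_term))
print(long_term.search("which units does the user prefer"))
```

The bounded `deque` silently forgets the oldest step, which is exactly the limitation that the external store is meant to compensate for.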

2.4. The Agentic Loop: The "Think-Act-Observe" Cycle

The agent's operational logic is an iterative loop, most commonly implemented with the ReAct (Reason + Act) pattern. This loop is the engine of its autonomy.

```python
# A PSEUDOCODE illustration of the ReAct loop.
# `llm`, `list_tools`, `parse_response`, and `is_goal_achieved` are assumed to be
# defined elsewhere; `memory` is assumed to expose an `update()` method.

def run_agent(user_goal, tools, memory):
    task_complete = False
    max_iterations = 10
    current_plan = []

    while not task_complete and max_iterations > 0:
        # STEP 1: THINK - Reason about the current state and decide next action
        # (the doubled braces keep `{params}` literal inside the f-string)
        prompt = f"""
        Goal: {user_goal}
        Current Plan: {current_plan}
        Memory: {memory}
        Available Tools: {list_tools(tools)}
        What is the next logical step? Respond with THOUGHT: [your reasoning] then ACTION: [tool_name({{params}})]
        """
        llm_response = llm.generate(prompt)
        # Example LLM output: "THOUGHT: I need to find the user's current location to get the weather. ACTION: get_user_location()"

        # STEP 2: ACT - Parse and execute the chosen action
        action, params = parse_response(llm_response)
        if action in tools:
            result = tools[action](params)  # e.g., Calls the `get_user_location` tool
        else:
            result = f"Error: Tool {action} not found."

        # STEP 3: OBSERVE - Update memory and assess progress
        memory.update({'thought': llm_response, 'action': action, 'result': result})
        current_plan.append(f"Executed {action} with result: {result}")

        # Check if goal is met or a new sub-task is identified
        if is_goal_achieved(result, user_goal):
            task_complete = True
        else:
            max_iterations -= 1  # Prevent infinite loops

    return {"status": "success" if task_complete else "incomplete", "summary": memory}
```

This loop continues until the goal is satisfied, a terminal condition is met, or a safety limit is reached.
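To make the cycle concrete, here is a small runnable variant that swaps the real model for a scripted stand-in. Several pieces are assumptions introduced only for this sketch: the regex-based `parse_response`, the `scripted_llm` helper, the `get_weather` tool, and the convention that the model signals completion by calling a pseudo-tool named `finish`.

```python
import re

# Matches the trailing "ACTION: tool_name(argument)" part of a model reply.
ACTION_PATTERN = re.compile(r"ACTION:\s*(\w+)\((.*)\)\s*$", re.DOTALL)


def parse_response(response: str):
    """Extract (tool_name, argument) from a 'THOUGHT: ... ACTION: tool(arg)' reply."""
    match = ACTION_PATTERN.search(response)
    if match is None:
        raise ValueError(f"No ACTION found in: {response!r}")
    return match.group(1), match.group(2).strip().strip("'\"")


def scripted_llm(replies):
    """Stand-in for a real model: returns canned replies in order."""
    iterator = iter(replies)
    return lambda prompt: next(iterator)


def run_agent(goal, tools, llm, max_iterations=5):
    history = []
    for _ in range(max_iterations):  # safety limit on the number of steps
        # THINK: ask the model for the next step, given everything seen so far
        prompt = f"Goal: {goal}\nHistory: {history}\nTools: {sorted(tools)}"
        tool_name, argument = parse_response(llm(prompt))
        if tool_name == "finish":  # assumed convention for "goal achieved"
            return {"status": "success", "answer": argument, "history": history}
        # ACT: run the chosen tool, or report that it does not exist
        tool = tools.get(tool_name)
        observation = tool(argument) if tool else f"Error: tool {tool_name} not found."
        # OBSERVE: record the outcome so the next prompt includes it
        history.append((tool_name, argument, observation))
    return {"status": "incomplete", "answer": None, "history": history}


tools = {"get_weather": lambda city: f"18 C and cloudy in {city}"}
llm = scripted_llm([
    "THOUGHT: I need the weather first. ACTION: get_weather('Paris')",
    "THOUGHT: I have what I need. ACTION: finish('It is 18 C and cloudy in Paris.')",
])

result = run_agent("What is the weather in Paris?", tools, llm)
print(result["status"], "-", result["answer"])
```

Replacing `scripted_llm` with a call to a real model is the only change needed to turn this into a live loop, although a production parser would have to tolerate far messier output than this regex does.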

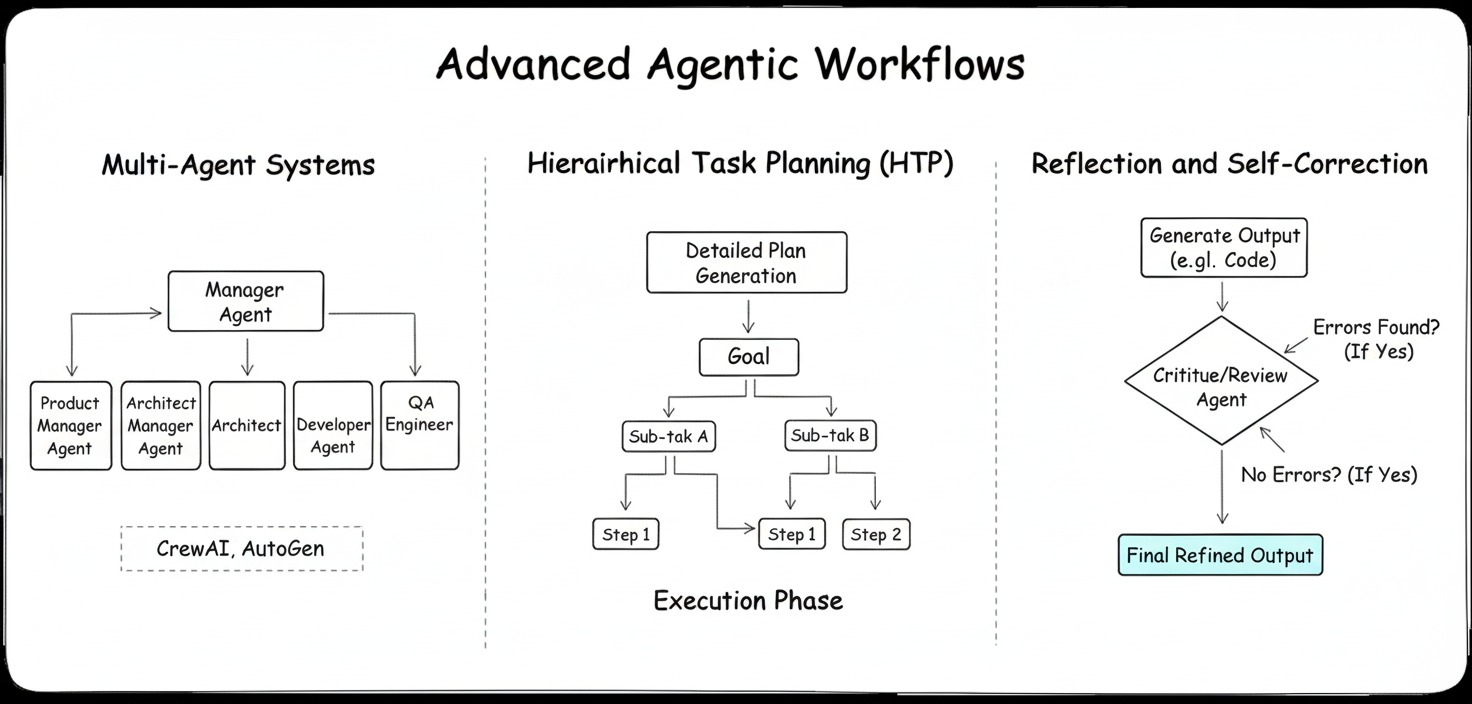

3. Advanced Agent Architectures: Beyond Simple ReAct

While ReAct is a foundational pattern, complex problems require more sophisticated architectures:

- Multi-Agent Systems: Different specialized agents work together, coordinated by a "manager" agent.

- Example: A software development team might consist of a "Product Manager" agent that breaks down a feature request, an "Architect" agent that designs the solution, a "Developer" agent that writes the code, and a "QA Engineer" agent that tests it. Frameworks like CrewAI and AutoGen are designed for this.

- Hierarchical Task Planning (HTP): The agent first creates a detailed, hierarchical tree of tasks and sub-tasks (a "plan") before executing any action. This is more robust than ReAct's step-by-step planning for highly complex goals.

- Reflection and Self-Correction: Advanced agents can critique their own work. After generating an output (e.g., a piece of code), a separate "critic" agent (or the same agent in a different role) reviews it for errors, improving quality without human intervention.
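The hierarchical planning idea above can be sketched as a tree that is built in full before anything runs, then walked depth-first so that only leaf tasks become executable actions. The `Task` class and the example plan are illustrative assumptions. In a real agent the tree would be produced by the LLM rather than written by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A node in the plan tree; a task with no subtasks is directly executable."""
    name: str
    subtasks: list["Task"] = field(default_factory=list)


def execution_order(task: Task) -> list[str]:
    """Depth-first walk that returns the leaf tasks in the order they would run."""
    if not task.subtasks:
        return [task.name]
    order = []
    for subtask in task.subtasks:
        order.extend(execution_order(subtask))
    return order


# Hypothetical plan, created up front before any action is executed.
plan = Task("Ship login feature", [
    Task("Design", [Task("Define API"), Task("Draft database schema")]),
    Task("Implement", [Task("Write handler"), Task("Write tests")]),
    Task("Release"),
])

for step_number, name in enumerate(execution_order(plan), start=1):
    print(f"{step_number}. {name}")
```

Because the whole tree exists before execution starts, it can be reviewed or re-planned as a unit, which is the contrast with ReAct's one-step-at-a-time decisions.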

4. Real-World Applications & Use Cases

The theoretical framework translates into powerful, practical applications across industries:

- Software Engineering: Devin (by Cognition AI) and SWE-Agent are pioneering this space. They can autonomously traverse codebases, understand bugs, write patches, test them, and submit pull requests. A 2024 study by Princeton researchers reported SWE-Agent resolving 12.29% of issues on the SWE-bench benchmark, a significant step forward in fully automated coding.

- Scientific Research: Agents are being used to automate the scientific method. They can hypothesize ("Based on this medical paper, drug X might interact with gene Y"), plan experiments (simulate the interaction using a bioinformatics tool), analyze results, and write a summary of findings.

- Enterprise Automation: Adept AI is focused on building agents that can navigate any software UI. Imagine an agent that can be told "Onboard the new hire, Sarah, in the Workday system," and it can log in, click through menus, fill out forms, and complete the process without any API integration required.

- Personal Intelligence: Projects like OpenAI's GPTs and Microsoft's Copilot Studio are democratizing agent creation, allowing users to build custom agents that can interact with their personal data (emails, documents, calendars) to perform tasks like scheduling, research synthesis, and personalized content creation.

5. Challenges and the Path Forward

Despite the excitement, building reliable agents is fraught with challenges:

- Reliability & Hallucination: An agent might decide on a logical but incorrect or non-existent action. Robust validation and error handling are critical.

- Cost and Latency: Each step in the ReAct loop requires an LLM call, leading to high costs and slow performance for complex tasks. Optimizing planning efficiency is a key research area.

- Security: Granting an agent the ability to execute code and call APIs introduces significant security risks. Sandboxed environments and strict permission controls are non-negotiable.

- Evaluation: How do you objectively measure the performance of an autonomous agent? New benchmarking suites (e.g., AgentBench) are emerging to test agents on tasks like web navigation, coding, and reasoning.

The near future will focus on overcoming these hurdles through better reasoning models, more efficient planning algorithms, and safer execution environments.
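The validation and permission controls mentioned under Reliability and Security can be sketched as a check that runs before any action is executed. The allowlist contents and the `validate_action` helper are hypothetical. A check like this complements a sandbox rather than replacing one.

```python
# Hypothetical allowlist: tool name -> the exact parameter names it accepts.
ALLOWED_TOOLS = {
    "web_search": {"query"},
    "read_file": {"path"},
}


def validate_action(tool_name: str, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the action may proceed."""
    if tool_name not in ALLOWED_TOOLS:
        return [f"tool '{tool_name}' is not on the allowlist"]
    expected = ALLOWED_TOOLS[tool_name]
    provided = set(arguments)
    problems = []
    for name in sorted(expected - provided):
        problems.append(f"missing parameter '{name}'")
    for name in sorted(provided - expected):
        problems.append(f"unexpected parameter '{name}'")
    return problems


# A well-formed action, a tool the agent was never granted, and a
# hallucinated parameter name.
print(validate_action("web_search", {"query": "agent benchmarks"}))
print(validate_action("run_shell_command", {"command": "ls"}))
print(validate_action("read_file", {"file": "notes.txt"}))
```

Returning the problems as text, instead of raising, lets the loop feed them back to the model as an observation so it can correct its next action.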

6. Building Your First Agent: A Practical Tutorial

Let's move beyond theory. Here’s how to build a simple web research agent using LangChain, a popular framework for orchestrating agentic workflows.

```python
# Requires: pip install langchain langchain-openai langchain-community duckduckgo-search
# Note: initialize_agent and agent.run are legacy LangChain APIs that later releases
# deprecate; if these imports fail, install an older langchain release.

import os

from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferWindowMemory
from langchain_community.agent_toolkits.load_tools import load_tools
from langchain_openai import OpenAI

# 1. Read your API key from the environment (never hard-code it in source)
if "OPENAI_API_KEY" not in os.environ:
    raise RuntimeError("Set the OPENAI_API_KEY environment variable before running.")

# 2. Initialize the LLM and Tools
llm = OpenAI(model="gpt-3.5-turbo-instruct", temperature=0)  # Use a cheaper, faster model for reasoning
# Gives it web search and API call abilities. "requests_all" can reach arbitrary
# URLs, so recent versions require the explicit allow_dangerous_tools opt-in.
tools = load_tools(["ddg-search", "requests_all"], llm=llm, allow_dangerous_tools=True)

# 3. Add memory to keep track of the conversation and plan
memory = ConversationBufferWindowMemory(k=5, memory_key="chat_history", return_messages=True)

# 4. Initialize the Agent with a ReAct framework
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,  # A robust ReAct variant
    verbose=True,  # Essential for debugging: see the agent's thoughts
    memory=memory,
    handle_parsing_errors=True,  # Prevents crashes on minor output parsing issues
)

# 5. Run the Agent with a complex, multi-step query
try:
    result = agent.run(
        "Research the latest news on SpaceX's Starship flight tests in 2024. "
        "Find out the key objectives of their most recent test and whether it was considered a success. "
        "Then, summarize the findings in a brief paragraph."
    )
    print(f"\nFINAL RESULT:\n{result}")
except Exception as e:
    print(f"An error occurred: {e}")
```

Running this code will show a verbose output of the agent's Thought -> Action -> Observation cycle, providing a clear window into its cognitive process.

7. Curated Resources for Deep Exploration

To move from tutorial to production, explore these foundational resources and frameworks:

- LangChain Agent Documentation: The best starting point. Its `Agent` and `Tool` modules provide the essential abstractions.

- Link: https://python.langchain.com/docs/modules/agents/

- Why: Excellent tutorials, multiple agent types (ReAct, Plan-and-Execute), and extensive tool integrations.

- AutoGPT (Significant-Gravitas): The project that catalyzed the agent space. It's a full-featured, general-purpose agent application.

- Link: https://github.com/Significant-Gravitas/AutoGPT

- Why: A real-world example of an agent with long-term memory (via vector databases), web UI, and file operations. Study its architecture to understand scale.

- CrewAI: A powerful framework for building multi-agent systems where different agents play different roles and collaborate.

- Link: https://github.com/joaomdmoura/crewai

- Why: Represents the next evolution past single agents. Perfect for modeling complex workflows like content creation teams or software development pods.

- SWE-Agent: A state-of-the-art, open-source agent designed specifically for solving real-world GitHub issues.

- Link: https://github.com/princeton-nlp/SWE-agent

- Why: It demonstrates how specializing an agent's tools and prompts for a specific domain (software engineering) dramatically increases its performance. A masterclass in domain-specific agent design.


Conclusion: The Age of Agentic Computing

The development of AI Agents is not merely an incremental improvement in AI; it is a foundational shift in human-computer interaction. We are moving from a paradigm of direct manipulation (where we command tools) to one of delegated goals (where we collaborate with partners).

This transition will redefine roles across industries, automating complex cognitive workflows and amplifying human potential in ways we are only beginning to imagine. The architects of this future will be those who understand not just how to use AI models, but how to orchestrate them into intelligent, reliable, and purposeful systems. The journey begins with understanding the core components (the brain, the hands, the memory, and the loop) that bring these digital partners to life.
