Introduction: The Rise of Agentic AI

The conversation around Large Language Models has shifted dramatically over the past couple of years. We are no longer just marveling at their ability to generate coherent text; the real excitement now lies in their capability to become autonomous agents. This transition moves the LLM from being a sophisticated completion engine into a dynamic, problem-solving entity that can act on its own behalf. It’s the difference between asking an LLM to write a search query and having it execute a multi-step research project that involves searching, synthesizing, and then writing a final report. This leap into autonomy isn't accidental or simply a result of larger models; it is rooted in fundamental architectural design choices. To make an LLM reliable in the real world (a world full of external tools, APIs, and uncertain outcomes), we need specialized blueprints. That's where agentic design patterns come in, offering structure to the often chaotic process of turning thought into action.

Understanding Agentic Design Patterns

Agentic design patterns are essentially formalized workflows that govern how an LLM decides what to do next. Think of them as the operating system for an AI agent. In traditional software engineering we use patterns like Singleton or Factory to manage objects and structure code. In agentic AI, these patterns address the inherent challenges of non-determinism, tool usage, and iterative refinement. They provide a predictable, repeatable structure to the decision-making loop, helping the agent to: first, reason through a complex goal; second, act on that reasoning by calling a specific tool; and third, observe the result to inform its subsequent actions. Without these patterns, the agent's behavior would be almost impossible to debug or scale. It would rely purely on the model's internal emergent abilities, which, frankly, are often not robust enough for production environments. These patterns give developers the controls necessary to manage cost, latency, and accuracy, making agentic systems viable for enterprise use.

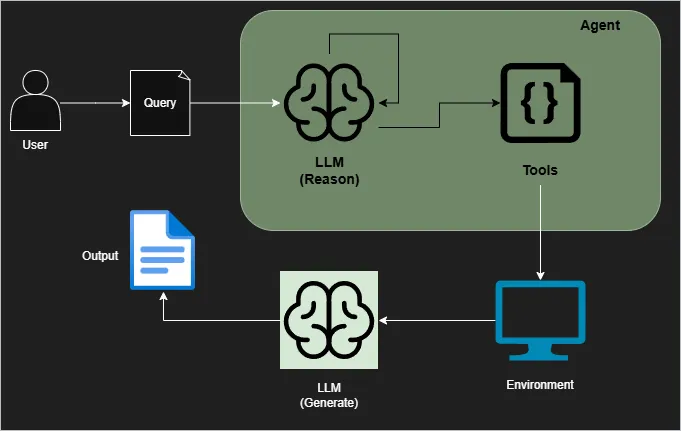

The ReAct Pattern: Reasoning Meets Action

The ReAct pattern, a portmanteau of Reasoning and Acting, is perhaps the most famous and foundational design in the agentic world. It was a breakthrough because it elegantly combined the strengths of Chain of Thought (CoT) reasoning with external tool usage. Before ReAct, approaches often separated thinking from doing. You had CoT for logic puzzles and models that could call tools for information retrieval, but the two abilities were rarely intertwined dynamically.

ReAct changed that by enforcing a continuous, tight feedback loop: Thought, Action, Observation.

First, the agent generates a Thought. This is the CoT component where the LLM explicitly breaks down the current task, assesses the information it has, and determines the very next necessary step. This verbalized thought process is critical; it externalizes the agent’s logic, making the system far easier to explain and debug. Next, based on that thought, the agent executes an Action. This action is a structured call to an external tool such as a search engine, a calculator, or a file management API. Finally, the system feeds the result of the action back to the LLM as an Observation. If the action was a web search, the observation is the search snippet. If it was a failed API call, the observation is the error message. The LLM then uses this fresh observation to formulate its next Thought, effectively closing the loop. It iterates through this cycle until it reaches a conclusive answer or hits an exit condition.
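The Thought, Action, Observation cycle can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: `scripted_llm` is a hypothetical stand-in for a real model call, the `calculator` tool and the `Action: tool[input]` text format are assumptions made for the example, and `max_steps` plays the role of the exit condition.

```python
import operator
import re
from typing import Callable, Dict

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    """Hypothetical tool: evaluates 'a op b'; failures are returned as text."""
    try:
        left, op, right = expression.split()
        return str(OPS[op](float(left), float(right)))
    except Exception as exc:  # the error message becomes the observation
        return f"error: {exc!r}"

TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")

def scripted_llm(transcript: str) -> str:
    """Stand-in for a model call: acts first, then answers from the observation."""
    if "Observation: " not in transcript:
        return "Thought: I need to multiply 12 by 7.\nAction: calculator[12 * 7]"
    last_observation = transcript.rsplit("Observation: ", 1)[1]
    return f"Thought: The tool returned the product.\nFinal Answer: {last_observation}"

def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)              # Thought (and maybe an Action)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            observation = "error: no valid action found"
        else:
            name, arg = match.groups()
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"error: unknown tool {name!r}"
        transcript += f"\nObservation: {observation}"  # fed back to the model
    return "stopped: step limit reached"

answer = react("What is 12 times 7?", scripted_llm)
```

Note that tool errors are not raised; they are written into the transcript so the next Thought can react to them, which is the behavior described above for failed API calls.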

From an implementation perspective, ReAct is marvelous for tasks requiring high adaptability. Imagine a customer service agent navigating a complex, proprietary knowledge base. It can search, observe a result, realize the result is insufficient, search again with a refined query, and then synthesize the final answer. This real-time improvisation, supported by frameworks like LangGraph which explicitly model these stateful cycles, makes ReAct the workhorse for dynamic, interactive applications. The primary drawback, one we must be honest about, is cost and latency. All that explicit CoT logging means more tokens are used, and the sequential nature of the thought-action-observation steps inherently slows down the overall response time.

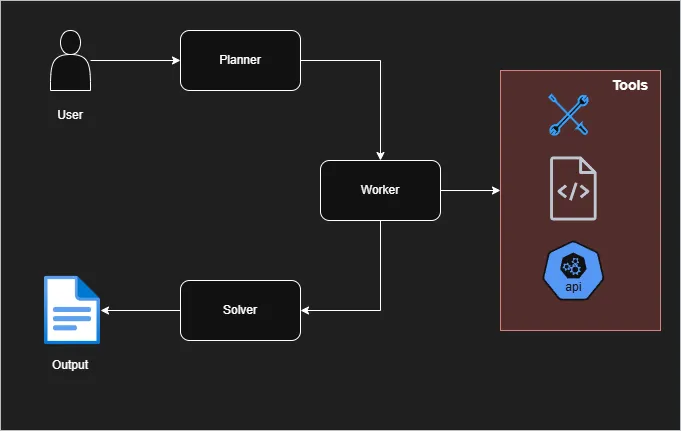

The ReWOO Pattern: Reasoning Without Observation

As agents grew more popular and cost became a key constraint, researchers sought ways to achieve complex execution without the heavy iterative overhead of ReAct. This led to ReWOO, which stands for Reasoning WithOut Observation. The name tells the story: it eliminates the observation loop during the execution phase, trading real-time improvisation for upfront efficiency.

ReWOO completely decouples the reasoning from the execution into three distinct phases: Planner, Worker, and Solver.

In the first phase, the Planner LLM takes the initial user query and performs all its complex reasoning at once. It generates a comprehensive, multi-step plan that identifies all necessary information retrieval tasks, but it does not execute them. Instead, it embeds abstract placeholders for the data it needs. For example, the plan might say: "Step 1: Retrieve the 2024 revenue for Company X [ID: Search1]. Step 2: Retrieve the industry growth rate for the last five years [ID: Search2]. Step 3: Synthesize the final report using [Search1] and [Search2]." The Planner is an expensive but single LLM call.

The second phase involves the Worker modules. These are the deterministic tool calls. Crucially, because the Planner has already laid out all the steps and identified the inputs, Worker tasks that do not depend on one another can be executed in parallel. A search for revenue and a search for growth rate can happen simultaneously. There is no LLM reasoning between the tool calls; the workers simply execute the deterministic I/O.

Finally, the Solver LLM takes the original plan and all the raw outputs from the Workers. It then synthesizes a final, cohesive answer by slotting the retrieved data into the predetermined structure.
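A rough sketch of the Planner, Worker, and Solver phases follows. Everything here is illustrative: `planner` and `solver` are hypothetical stand-ins for the two LLM calls, `search` reads from a canned dictionary rather than a real API, and the `[Search1]` placeholder syntax simply mirrors the example plan above.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# One plan step: (evidence id, tool name, tool input).
Plan = List[Tuple[str, str, str]]

def planner(query: str) -> Plan:
    """Stand-in for the single Planner LLM call; returns a fixed plan."""
    return [
        ("Search1", "search", "2024 revenue for Company X"),
        ("Search2", "search", "industry growth rate, last five years"),
    ]

# Canned results so the sketch runs offline (placeholders, not real data).
FAKE_INDEX = {
    "2024 revenue for Company X": "<revenue figure>",
    "industry growth rate, last five years": "<growth rate>",
}

def search(q: str) -> str:
    """Hypothetical worker tool; a real one would call a search API."""
    return FAKE_INDEX.get(q, "no result")

TOOLS: Dict[str, Callable[[str], str]] = {"search": search}

def run_workers(plan: Plan) -> Dict[str, str]:
    """Run all (independent) steps in parallel, with no LLM call in between."""
    with ThreadPoolExecutor() as pool:
        futures = {eid: pool.submit(TOOLS[tool], arg) for eid, tool, arg in plan}
        return {eid: fut.result() for eid, fut in futures.items()}

def solver(template: str, evidence: Dict[str, str]) -> str:
    """Stand-in for the Solver LLM: fills [ID] placeholders with evidence."""
    return re.sub(r"\[(\w+)\]", lambda m: evidence.get(m.group(1), m.group(0)), template)

plan = planner("Write a report on Company X")
evidence = run_workers(plan)
report = solver("Revenue: [Search1]. Growth: [Search2].", evidence)
```

The sketch assumes every step is independent. If a step needed the output of an earlier one, the workers would have to respect that ordering instead of running everything at once.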

The genius of ReWOO is its efficiency and low cost. By eliminating the internal monologue (the Thought steps) during tool execution, it drastically reduces token usage, by as much as five to ten times compared to a complex ReAct trace in favorable cases. It is a strong fit for structured, repeatable workflows, like batch data analysis, complex report generation where data sources are known, or any high-throughput application where speed is paramount. The major trade-off, and this is a critical one, is fragility. If the initial plan generated by the Planner is flawed, or if a Worker returns an unexpected error, the agent has no built-in mechanism to self-correct or pivot. The original plan is fixed, often leading to a hard failure or a suboptimal result. Use ReWOO only when you have high confidence in the predictability of the task environment.

The CodeAct Pattern: When the Tool Needs to Be Built

Think of it this way: ReAct makes smart use of the tools on the workbench, and ReWOO draws up a perfect schedule for using them. But CodeAct? That’s the agent who can actually design and build a new, temporary tool right there on the spot. This is what truly transforms an LLM into a computational powerhouse: it’s no longer limited by a fixed set of APIs but can generate novel computation to solve problems involving complex math, data reshaping, or obscure system interactions.

The core mechanism here is a highly technical, self-correcting loop that’s a direct descendant of ReAct: Reason, Code, Execute, Observe/Debug.

When facing a request like "Calculate the 95th percentile of this sales data and visualize the distribution," the agent's first Reasoning step concludes that this task requires custom programmatic logic. It can't just call a single search API. So, it generates executable code, often Python or SQL, specifically tailored to the current data and goal. This code is instantly sent off to a secure, sandboxed interpreter for execution. The output of that interpreter is the agent's Observation.

This is where the magic of self-debugging happens. If the code is buggy (say it throws a KeyError because the agent misspelled a column name, or a SyntaxError), the entire traceback is returned as the observation. The agent doesn’t panic; its next Thought is to act as a seasoned programmer would: "Okay, the system hit a KeyError on line 12. I need to fix the indexing logic and try again." This iterative self-correction process allows CodeAct agents to tackle genuinely novel problems and complex algorithmic challenges with little or no human intervention. This capability is vital for advanced coding assistants and autonomous data analysis engines today. The caveat, and please pay close attention here, is security. Running arbitrary code from an LLM is inherently dangerous, so the execution environment must be locked down and sandboxed to prevent any malicious or resource-intensive code from impacting the host system. It’s a powerful but risk-sensitive approach.
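The self-debugging loop can be sketched as follows. This is only an illustration under loud assumptions: `scripted_coder` is a hypothetical stand-in for the LLM that deliberately misspells a key on its first attempt, and `exec()` is used purely to keep the example short. `exec()` is not a sandbox; a real deployment would run generated code in an isolated process or container with resource limits.

```python
import traceback
from typing import Callable, Dict, Tuple

def run_code(source: str, data: Dict[str, list]) -> Tuple[bool, str]:
    """Execute generated code and return (ok, observation).

    WARNING: exec() offers no isolation. It stands in for a real sandbox here.
    """
    namespace = {"data": data}
    try:
        exec(source, namespace)
        return True, repr(namespace.get("result"))
    except Exception:
        # The full traceback is the observation the agent debugs from.
        return False, traceback.format_exc()

def scripted_coder(task: str, last_error: str) -> str:
    """Stand-in for the LLM: the first attempt is buggy, the retry is fixed."""
    if "KeyError" in last_error:
        return "result = max(data['sales'])"
    return "result = max(data['sale'])"  # misspelled key, raises KeyError

def codeact(task: str, data: Dict[str, list],
            coder: Callable[[str, str], str], max_attempts: int = 3) -> Tuple[str, int]:
    error = ""
    for attempt in range(1, max_attempts + 1):
        ok, observation = run_code(coder(task, error), data)
        if ok:
            return observation, attempt
        error = observation  # feed the traceback into the next attempt
    raise RuntimeError(f"no working code after {max_attempts} attempts")

output, attempts = codeact("Find the largest sale", {"sales": [3, 9, 4]}, scripted_coder)
```

Here the first attempt fails with a KeyError, the traceback is passed back to the coder, and the second attempt succeeds. The attempt cap matters: without it, a model that keeps producing broken code would loop forever.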

Honorable Mentions: Other Emerging Patterns

Beyond the core thinkers and doers (ReAct, ReWOO, and CodeAct), the field has birthed several specialized patterns that focus on quality, strategy, and teamwork. These aren't always complete architectures, but they are critical extensions that make agents production-ready.

First, let's talk about Reflection (or Reflexion). We've all had that moment after hitting 'send' where we reread an email and spot a typo or a factual error. Reflection is just that, but for the agent. It adds a crucial metacognitive loop where the agent is prompted to critically review its own generated output or its recent actions against a predefined set of quality standards or success metrics. This extra LLM pass, executed after the main task is finished but before the answer is delivered, is surprisingly effective. It acts as an automated editorial gate that can catch many hallucinations and noticeably improve the perceived quality of the final output. It is well worth adding to any high-stakes application.
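As a small sketch of that editorial gate: the loop below critiques a draft against a checklist and revises until the critique comes back clean or a round limit is hit. The `rule_critic` and `scripted_reviser` functions are hypothetical stand-ins; in a real agent both would typically be LLM calls, and the two rules shown are invented for the example.

```python
from typing import Callable, List

def rule_critic(text: str) -> List[str]:
    """Stand-in critic: checks the draft against two example quality rules."""
    issues = []
    if "Sources:" not in text:
        issues.append("missing a Sources section")
    if len(text.split()) > 50:
        issues.append("longer than 50 words")
    return issues

def scripted_reviser(text: str, issues: List[str]) -> str:
    """Stand-in reviser: only knows how to fix the missing Sources section."""
    if "missing a Sources section" in issues:
        text += "\nSources: (to be filled in by the agent)"
    return text

def reflect_and_revise(draft: str,
                       critic: Callable[[str], List[str]],
                       reviser: Callable[[str, List[str]], str],
                       max_rounds: int = 2) -> str:
    for _ in range(max_rounds):
        issues = critic(draft)
        if not issues:          # the draft passes the gate
            break
        draft = reviser(draft, issues)
    return draft

final = reflect_and_revise("Company X grew last quarter.", rule_critic, scripted_reviser)
```

Capping the number of rounds keeps the added cost and latency bounded, since every round is an extra pass over the output.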

Then there is Tree of Thought (ToT). Where Chain of Thought (CoT), used in ReAct, forces the agent to commit to a single linear path of reasoning, ToT allows for the exploration of multiple plausible thought branches simultaneously. Imagine playing a complex game of chess: you don't just consider one move; you consider several, exploring the consequences of each before selecting the most promising line of play. ToT does this for reasoning. It's computationally more expensive, definitely, but it’s a brilliant fit for tasks requiring complex strategic planning, creative brainstorming, or scenarios where the best path isn't obvious upfront.
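One simple way to realize this branching is a beam search over candidate thoughts. The toy below is an assumption-laden sketch, not the method from any particular paper: `propose` and `score` are hypothetical stand-ins for the LLM calls that generate and evaluate thoughts, and the "problem" is just reaching a target number from a start value using three operations.

```python
from typing import Callable, Dict, List, Optional, Tuple

OPS: Dict[str, Callable[[int], int]] = {
    "+3": lambda v: v + 3,
    "*2": lambda v: v * 2,
    "-1": lambda v: v - 1,
}

def propose(value: int) -> List[Tuple[str, int]]:
    """Stand-in for an LLM proposing several next thoughts from one state."""
    return [(name, fn(value)) for name, fn in OPS.items()]

def score(value: int, target: int) -> int:
    """Stand-in for an LLM judging how promising a partial solution is."""
    return -abs(target - value)

def tree_of_thought(start: int, target: int,
                    beam_width: int = 2, max_depth: int = 4) -> Optional[List[str]]:
    frontier: List[Tuple[List[str], int]] = [([], start)]
    for _ in range(max_depth):
        candidates = []
        for path, value in frontier:
            for name, new_value in propose(value):   # branch out
                new_path = path + [name]
                if new_value == target:
                    return new_path
                candidates.append((new_path, new_value))
        # Keep only the most promising branches for the next level.
        candidates.sort(key=lambda c: score(c[1], target), reverse=True)
        frontier = candidates[:beam_width]
    return None

def replay(start: int, path: List[str]) -> int:
    """Apply a sequence of operations to the start value."""
    for name in path:
        start = OPS[name](start)
    return start

path = tree_of_thought(start=1, target=10)
```

The `beam_width` parameter is where the extra cost shows up: each level evaluates every kept branch times every proposal, whereas a single chain of thought would evaluate exactly one.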

Finally, we have Multi-Agent Collaboration. I hesitate to call this a single pattern because it's really an architecture built around specialization and communication. The idea is simple: complex problems are best solved by a team, not a single genius. You define multiple specialized agents (perhaps a Researcher to gather data, a Critic to audit sources, and a Writer to synthesize the report) and orchestrate their conversation. Frameworks like AutoGen are built to facilitate these multi-agent conversations, allowing developers to map complex organizational workflows directly onto a digital team of agents. This collaborative approach often leads to higher-quality and more reliable outcomes by leveraging role-specific expertise and distributed effort.
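The Researcher, Critic, and Writer team can be sketched as plain functions that read a shared message history. This is a deliberately simple, framework-free illustration and does not use AutoGen's API; the agent bodies are hypothetical stand-ins for LLM-backed roles, and the findings are invented placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Message:
    sender: str
    content: str

Agent = Callable[[List[Message]], str]

def researcher(history: List[Message]) -> str:
    """Stand-in: returns findings, one of which has no usable source."""
    return "Finding A (source: internal wiki); Finding B (source: unknown)"

def critic(history: List[Message]) -> str:
    """Stand-in: drops any finding whose source is unknown."""
    findings = history[-1].content.split("; ")
    return "; ".join(f for f in findings if "source: unknown" not in f)

def writer(history: List[Message]) -> str:
    """Stand-in: writes the report from the critic's vetted findings."""
    return f"Report based on vetted findings: {history[-1].content}"

def run_team(task: str, team: List[Tuple[str, Agent]]) -> List[Message]:
    """Orchestrate the conversation: each agent sees everything said so far."""
    history = [Message("user", task)]
    for name, agent in team:
        history.append(Message(name, agent(history)))
    return history

conversation = run_team(
    "Summarize what we know about topic T",
    [("researcher", researcher), ("critic", critic), ("writer", writer)],
)
final_report = conversation[-1].content
```

A fixed speaking order is the simplest possible orchestration. Real frameworks usually let a coordinator or the agents themselves decide who speaks next.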

Comparative Overview of Key Patterns

Designing Hybrid Agent Systems

In a professional setting, we rarely deploy a pure ReAct or a pure ReWOO agent. The most powerful and reliable systems are hybrids that leverage the strengths of multiple patterns across a complex workflow. This is where agentic engineering becomes a true discipline.

Imagine a complex task: "Analyze the last quarter's stock performance for three different tech companies and explain the discrepancy in their market cap growth." This task is too complex for a single pattern. A robust hybrid system might work like this:

- Initial Triage: The primary coordinator agent recognizes the request involves structured data retrieval for three companies. It employs a ReWOO Planner to quickly and efficiently define parallel search and API calls to fetch the three separate data sets (low latency, high throughput for a predictable step).

- Data Processing: Once the raw data is retrieved, the coordinator spins up a CodeAct Agent. This agent is instructed to perform all the necessary cleaning, statistical analysis, and visualization generation using code. If the code fails due to missing data, the CodeAct loop allows it to self-correct or explicitly prompt for a missing tool.

- Synthesis and Interpretation: The analyzed outputs are then passed to a ReAct Agent. This agent's task is inherently ambiguous: "explain the discrepancy." It might use the initial analysis, observe a key difference, and then spontaneously decide to perform a new, targeted web search to find news articles or analyst reports to contextualize the financial data (high adaptability for an unpredictable step).

- Final Quality Gate: Before sending the final report to the user, the entire output is passed through a Reflection layer, which critiques the language, factual citations, and overall coherence.

This tiered approach, often implemented using frameworks that support graph-based state machines like LangGraph, is what enables agents to move from simple proofs of concept to production-grade systems that can handle real-world ambiguity and complexity with far fewer crashes and hallucinations. We are composing specialized components, not just relying on a general model to do everything.
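The coordinator's triage logic for the four stages above might look something like the sketch below. The `Step` fields and the routing rules are assumptions made for illustration; a real coordinator would likely be an LLM call or a richer policy, not two booleans.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Step:
    description: str
    predictable: bool        # are the required tool calls known upfront?
    needs_computation: bool  # does it need custom code rather than a fixed API?

def choose_pattern(step: Step) -> str:
    """Hypothetical routing rule mapping a step's traits to a pattern."""
    if step.needs_computation:
        return "CodeAct"   # custom logic with a self-correcting loop
    if step.predictable:
        return "ReWOO"     # plan once, fetch in parallel
    return "ReAct"         # improvise step by step

workflow: List[Step] = [
    Step("Fetch last quarter's data for three companies", True, False),
    Step("Clean the data and compute statistics", True, True),
    Step("Explain the discrepancy in market cap growth", False, False),
]

# Every run ends with the same quality gate, regardless of the steps before it.
plan: List[Tuple[str, str]] = [(s.description, choose_pattern(s)) for s in workflow]
plan.append(("Review the final report", "Reflection"))
```

Making the routing explicit like this also gives you a natural place to log why each pattern was chosen, which helps when a hybrid run misbehaves.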

Implementation Tips and Frameworks

Moving these design patterns from theory into working code requires robust orchestration frameworks. These tools abstract away the low-level plumbing and allow developers to focus on the high-level agent logic.

LangChain and LangGraph offer perhaps the most popular and versatile toolkit. While LangChain provides the foundational components like tool wrappers, memory management, and model integration, it’s LangGraph that truly enables these agentic patterns. LangGraph allows you to define the agent's workflow as a directed acyclic graph (DAG) or, crucially, a cyclical graph. This capability is what explicitly models the Thought-Action-Observation loop of ReAct or the self-correction loop of CodeAct. By defining the state transitions clearly, you gain much better visibility into the agent’s execution path, easing one of the biggest debugging headaches in agentic AI.
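To make the idea of a cyclical graph concrete, here is a tiny state machine in plain Python. It is not LangGraph's API, only a sketch of the underlying concept: nodes are functions that update a shared state, and each node has a router that picks the next node, which is what allows the loop back from `observe` to `think`. All names are invented for the example.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "END"

def think(state: State) -> State:
    state["trace"].append("think")
    return state

def act(state: State) -> State:
    state["trace"].append("act")
    state["attempts"] += 1
    return state

def observe(state: State) -> State:
    state["trace"].append("observe")
    # Toy exit condition: pretend the second attempt succeeds.
    state["done"] = state["attempts"] >= 2
    return state

NODES: Dict[str, Callable[[State], State]] = {"think": think, "act": act, "observe": observe}

# Each router inspects the state and names the next node; "observe" can loop back.
EDGES: Dict[str, Callable[[State], str]] = {
    "think": lambda s: "act",
    "act": lambda s: "observe",
    "observe": lambda s: END if s["done"] else "think",
}

def run_graph(entry: str, state: State, max_steps: int = 20) -> State:
    node = entry
    for _ in range(max_steps):
        if node == END:
            return state
        state = NODES[node](state)
        node = EDGES[node](state)
    raise RuntimeError("step limit reached before END")

final_state = run_graph("think", {"trace": [], "attempts": 0, "done": False})
```

Because every transition goes through `EDGES`, the recorded `trace` shows exactly which path the agent took, which is the debugging benefit described above.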

AutoGen, a framework from Microsoft, offers an alternative perspective by focusing on the Multi-Agent Collaboration pattern. Instead of defining a single ReAct loop, AutoGen’s core philosophy is that complex problems are best solved by a team of agents with specialized roles communicating with each other. This can be very effective for implementing ReWOO-style parallelism, where a planning agent delegates tasks to specialized worker agents while the framework manages the conversation and synthesis in a chat-based environment.

Finally, while DSPy is not an agent framework itself, it is becoming increasingly useful for making the reasoning components in all these patterns reliable. DSPy focuses on programmatic prompt optimization. Rather than manually tweaking a ReAct prompt for weeks, you define the high-level inputs and outputs, and DSPy essentially compiles and optimizes the prompt, often generating the necessary CoT structure or few-shot examples automatically. Integrating DSPy into the LLM calls of any ReAct, ReWOO Planner, or CodeAct debugging step can noticeably improve the accuracy and stability of the underlying LLM reasoning, helping ensure that your agent’s Thought is actually effective.

To build reliable agents, I always recommend starting by clearly defining the Tool Interface. In my experience, poorly defined tool descriptions are a leading cause of agent failure. Treat the tool definition as if you were writing highly critical documentation for a junior developer; clarity, examples, and precise input schemas are paramount.
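As an illustration of what a carefully specified tool might look like, the sketch below bundles a description, a typed input schema, and a worked example into one object, and validates a proposed call before it is executed. The `ToolSpec` class, the `search_orders` tool, and the field layout are all hypothetical; they loosely resemble the JSON-schema style many function-calling APIs use, but they are not any specific library's format.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class ToolSpec:
    name: str
    description: str                        # when to use it, and when not to
    parameters: Dict[str, Dict[str, Any]]   # arg name -> {"type", "description"}
    required: List[str] = field(default_factory=list)
    example: Dict[str, Any] = field(default_factory=dict)

PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}

def validate_call(spec: ToolSpec, args: Dict[str, Any]) -> List[str]:
    """Return a list of problems with a proposed call (empty means valid)."""
    errors = []
    for name in spec.required:
        if name not in args:
            errors.append(f"missing required argument '{name}'")
    for name, value in args.items():
        if name not in spec.parameters:
            errors.append(f"unknown argument '{name}'")
        elif not isinstance(value, PY_TYPES[spec.parameters[name]["type"]]):
            errors.append(f"argument '{name}' should be {spec.parameters[name]['type']}")
    return errors

search_orders = ToolSpec(
    name="search_orders",
    description="Look up customer orders by free-text query. "
                "Use for order status questions; do not use for refunds.",
    parameters={
        "query": {"type": "string", "description": "Customer name or order id"},
        "limit": {"type": "integer", "description": "Maximum results to return"},
    },
    required=["query"],
    example={"query": "order 1042", "limit": 5},
)

problems = validate_call(search_orders, {"limit": "five"})
```

Returning the validation errors as readable text, rather than raising, means they can be handed straight back to the model as an observation so it can repair its own call.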

Conclusion: Towards a Pattern Language for AI Agents

The journey from conversational AI to autonomous agent marks a genuine paradigm shift in software development. We are witnessing the birth of a new engineering discipline. The foundational concepts of ReAct, ReWOO, and CodeAct are not just research curiosities; they are the architectural bedrock of reliable, scalable AI systems. They are the first definitive words in what will soon be a comprehensive Pattern Language for Agentic AI.
