What is Prompt Engineering? The Foundation of AI Communication

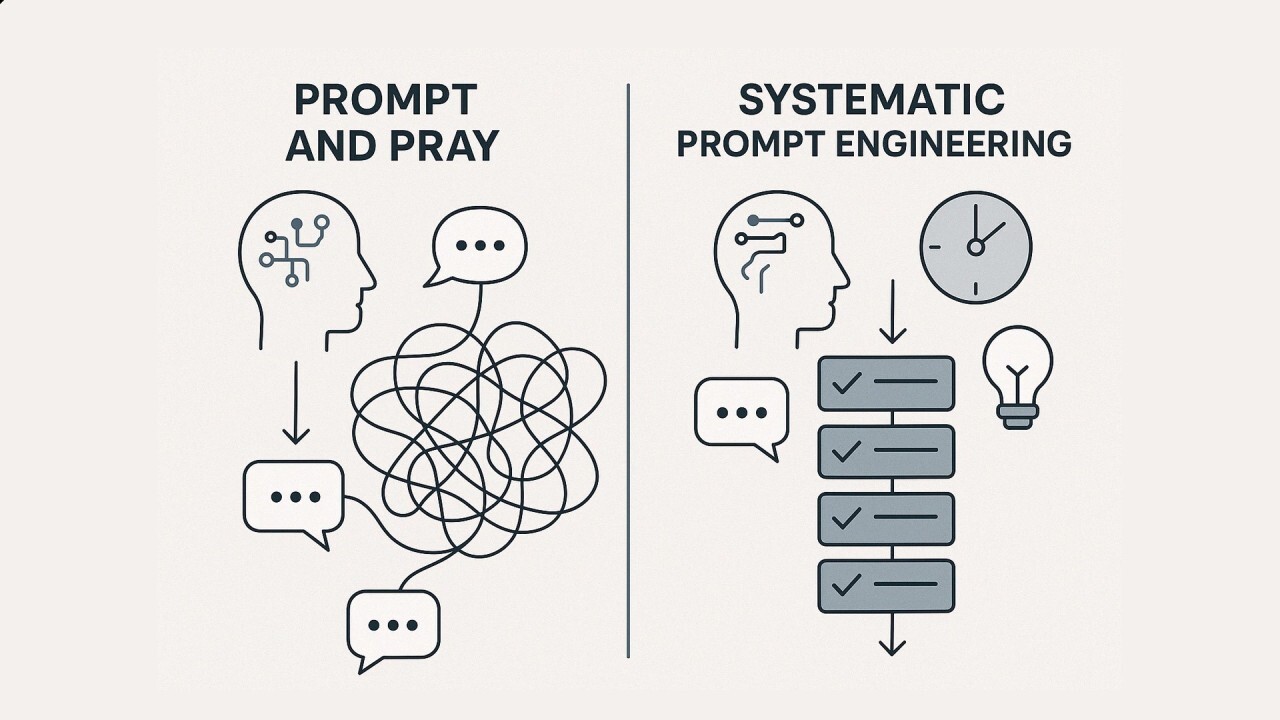

At its core, interacting with AI is a conversation. But unlike human conversations, AI requires explicit, well-structured guidance to understand our intent and deliver the desired output. This is the domain of prompt engineering.

Defining the Core: Prompt Engineering, AI Communication, and LLM Prompts

Let's establish a clear vocabulary:

- Prompt Engineering: This is the discipline of designing and optimizing inputs (prompts) to effectively guide Large Language Models (LLMs) and other AI systems to produce accurate, relevant, and high-quality outputs. As Google's "Whitepaper on Prompt Engineering" states, it's "the process of designing high-quality prompts that guide LLMs to produce accurate outputs. This process involves tinkering to find the best prompt, optimizing prompt length, and evaluating a prompt's writing style and structure in relation to the task"[2].

- AI Communication: This broader concept encompasses all forms of interaction between humans and artificial intelligence systems, including how we provide input, how AI processes it, and how it generates responses. It's about bridging the cognitive gap between human thought and machine logic.

- LLM Prompts: These are the specific textual inputs—questions, instructions, examples, or context—that you provide to a Large Language Model. They are the direct interface through which you communicate your needs to the AI.

In essence, prompt engineering is the method, LLM prompts are the specific inputs, and AI communication is the overarching interaction. Mastering the first is key to excelling at the latter two.

The 'Why': Importance of Prompt Engineering in the AI Era

Why has prompt engineering become so critically important? In an era where AI is rapidly integrating into every facet of our lives, the ability to communicate effectively with these powerful models is no longer a niche skill but a fundamental requirement.

Leading AI research institutions like OpenAI and Google AI have pioneered the development of LLMs, but their true utility is unlocked by skilled human interaction. Prompt engineering empowers users to:

- Unlock AI's Full Potential: Move beyond generic, surface-level responses to achieve highly specific, nuanced, and valuable outputs tailored to your exact needs.

- Improve Efficiency and Accuracy: Reduce the time spent on trial-and-error by crafting precise prompts that minimize misunderstandings and maximize the relevance of AI-generated content.

- Drive Innovation: By understanding how to effectively steer AI, you can push the boundaries of what these models can achieve, fostering creativity and solving complex problems.

- Bridge the Human-AI Gap: Ensure that AI systems truly understand human intent, leading to more intuitive and productive partnerships.

Think of it as learning the native tongue of AI. Without it, you're relying on a rudimentary phrasebook; with it, you can engage in deep, meaningful dialogue. For a foundational overview of this critical skill, explore the Texas Tech University Guide to Prompt Engineering.

Beginner's Tip: Start by thinking of prompt engineering as giving clear, specific instructions to a very smart but literal-minded assistant. The more precise you are, the better the results you'll get.

The Anatomy of an Effective LLM Prompt: Deconstructing for Maximum Impact

Just as a well-written essay has an introduction, body, and conclusion, an effective LLM prompt has specific components that, when combined, guide the AI to optimal performance. Understanding this "prompt anatomy" is crucial for crafting clear and impactful LLM prompts.

While the exact structure can vary, most high-performing prompts include some or all of the following elements:

- Instruction/Task: The core directive telling the AI what to do. This should be clear, concise, and unambiguous.

- Example: "Summarize the following text."

- Context/Background Information: Any relevant details the AI needs to understand the task and generate an appropriate response. This could include previous turns in a conversation, specific domain knowledge, or relevant data.

- Example: "The following text is a scientific abstract about quantum computing."

- Persona/Role: Assigning a specific role to the AI can significantly influence its tone, style, and perspective.

- Example: "Act as a seasoned cybersecurity analyst."

- Examples (Few-Shot Prompting): Providing one or more input-output examples helps the AI understand the desired format, style, and logic of the task. This is particularly powerful for complex or nuanced tasks.

- Example: "Input: 'Apple', Output: 'Fruit'. Input: 'Carrot', Output: 'Vegetable'. Input: 'Potato', Output: '..."

- Output Format/Constraints: Explicitly stating how you want the output structured (e.g., bullet points, JSON, a specific word count, a particular tone).

- Example: "Provide the summary in three bullet points, each no longer than 20 words. Use a formal tone."

- Guardrails/Negative Constraints: Specifying what the AI should not do or include.

- Example: "Do not include any personal opinions or speculative information."

A systematic survey of prompt engineering techniques by Schulhoff et al. highlights the importance of such structured approaches, establishing taxonomies of prompting techniques and a detailed vocabulary to enhance understanding and application[1]. Building your prompts from these components makes the AI's responses more precise and more useful.
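One way to make the components listed above concrete is to assemble them programmatically. The following is a minimal sketch, not a standard API: the `PromptParts` class, the `build_prompt` function, and the ordering of the sections are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """Hypothetical container for the prompt components described above."""
    instruction: str                       # the core task
    context: str = ""                      # background information
    persona: str = ""                      # role the model should adopt
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, output) pairs
    output_format: str = ""                # structure, length, tone
    guardrails: str = ""                   # what the model should avoid


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty components into one prompt, in a fixed order."""
    sections = []
    if parts.persona:
        sections.append(parts.persona)
    if parts.context:
        sections.append(parts.context)
    sections.append(parts.instruction)
    for example_input, example_output in parts.examples:
        sections.append(f"Input: {example_input}\nOutput: {example_output}")
    if parts.output_format:
        sections.append(parts.output_format)
    if parts.guardrails:
        sections.append(parts.guardrails)
    # Blank lines between sections keep each component visually distinct.
    return "\n\n".join(sections)


prompt = build_prompt(PromptParts(
    instruction="Summarize the following text.",
    context="The following text is a scientific abstract about quantum computing.",
    persona="Act as a seasoned cybersecurity analyst.",
    output_format="Provide the summary in three bullet points, each no longer than 20 words. Use a formal tone.",
    guardrails="Do not include any personal opinions or speculative information.",
))
print(prompt)
```

Keeping each component in its own field makes it easy to change one element (for example, the persona) and compare the outputs while everything else stays fixed.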

Mastering Clarity: Crafting Clear & Specific AI Prompts (Overcoming AI Misunderstandings)

One of the most common frustrations users face is AI misunderstanding prompts. This often stems from a lack of clarity, specificity, or context in the prompt itself. Mastering the art of crafting clear AI prompts is paramount to transforming ineffective interactions into productive partnerships.

The Clarity Compass: A Step-by-Step Framework for Prompt Creation

To guide your prompt writing, consider this structured methodology, a "Clarity Compass" framework for prompt creation:

- Define Your Objective Clearly: Before writing a single word, know exactly what you want the AI to achieve. What is the desired outcome? What problem are you solving?

- Bad Objective: "Tell me about marketing."

- Good Objective: "Generate three innovative content marketing ideas for a B2B SaaS company selling project management software, targeting small to medium businesses."

- Provide Comprehensive Context: Give the AI all necessary background information. Who is the audience? What is the industry? What previous information should it consider?

- Specify the Output Format: Explicitly state how you want the response structured. Do you need a list, a paragraph, a table, or code?

- Set Clear Constraints: Define boundaries. How long should the response be? What tone should it adopt? Are there any forbidden topics or keywords?

- Iterate and Refine: Prompt engineering is rarely a one-shot process. Be prepared to test, evaluate the output, and refine your prompt based on the results.
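The first four steps of the framework can be treated as a checklist to run before a prompt is sent. The sketch below is only an illustration: the field names in `CLARITY_COMPASS_FIELDS` and the `missing_fields` helper are hypothetical and are not part of any library.

```python
# Hypothetical field names mirroring the first four Clarity Compass steps.
CLARITY_COMPASS_FIELDS = ("objective", "context", "output_format", "constraints")


def missing_fields(draft: dict[str, str]) -> list[str]:
    """Return the checklist fields that are absent or blank in a draft."""
    return [
        name for name in CLARITY_COMPASS_FIELDS
        if not draft.get(name, "").strip()
    ]


draft = {
    "objective": (
        "Generate three innovative content marketing ideas for a B2B SaaS "
        "company selling project management software, targeting small to "
        "medium businesses."
    ),
    "context": "",
    "output_format": "A numbered list.",
}

print(missing_fields(draft))  # ['context', 'constraints']
```

The fifth step, iteration, is what happens after the checklist passes: send the prompt, review the output, and revise the fields that caused problems.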

Common Prompting Pitfalls and How to Avoid Them

Why does AI sometimes misunderstand prompts? Often the cause is a common mistake that introduces ambiguity or vagueness. Here are some pitfalls and how to steer clear of them:

- Vagueness: Using general terms when specific ones are needed.

- Bad: "Write something about history."

- Good: "Write a 200-word summary of the causes of the French Revolution for a high school history class."

- Ambiguity: Phrases with multiple interpretations.

- Bad: "List the best ways to solve this." (What "this"? "Best" by what metric?)

- Good: "List three cost-effective strategies for reducing customer churn in a subscription service, focusing on retention rather than acquisition."

- Combining Too Many Queries: Asking for multiple, unrelated tasks in a single prompt can overwhelm the AI.

- Bad: "Write a poem about space, then summarize a news article, and also give me a recipe for cookies."

- Good: Break these into separate prompts.

- Lack of Persona/Role: Not giving the AI a role can lead to generic, uninspired responses.

- Bad: "Explain quantum physics."

- Good: "Explain quantum physics to a 10-year-old using simple analogies, acting as a friendly science teacher."
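The four pitfalls above can be approximated with a few crude text checks. This is a rough sketch under stated assumptions: the `lint_prompt` function, its warning codes, and its thresholds (such as the eight-word minimum) are invented for illustration, and simple pattern matching like this will miss many cases and flag some good prompts.

```python
import re


def lint_prompt(prompt: str) -> list[str]:
    """Return heuristic warning codes for common prompting pitfalls."""
    warnings = []
    text = prompt.strip()

    # Vagueness: very short prompts rarely state audience, scope, or length.
    if len(text.split()) < 8:
        warnings.append("vague")

    # Ambiguity: the prompt ends on a pronoun with no stated referent.
    if re.search(r"\b(this|that|it)\b[.?!]?$", text, flags=re.IGNORECASE):
        warnings.append("ambiguous")

    # Too many queries: several tasks chained with "then" / "and also".
    chained = re.findall(r"\b(?:then|and also)\b", text, flags=re.IGNORECASE)
    if len(chained) >= 2:
        warnings.append("overloaded")

    # Lack of persona: no role-assigning phrase found.
    if not re.search(r"\b(you are|act as|acting as)\b", text, flags=re.IGNORECASE):
        warnings.append("no_persona")

    return warnings


print(lint_prompt("List the best ways to solve this."))
print(lint_prompt(
    "Explain quantum physics to a 10-year-old using simple analogies, "
    "acting as a friendly science teacher."
))
```

A check like this is best used as a reminder while drafting, not as a gate: a human still has to judge whether the prompt says what is actually wanted.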

Here's an example of a poorly constructed prompt and its refined version, demonstrating how prompt refinement leads to better AI output:

# Poorly constructed prompt

poor_prompt = "Tell me about marketing strategies."

# Refined, clear prompt

refined_prompt = """

You are a Senior Marketing Consultant specializing in B2B SaaS.

Your task is to generate three actionable content marketing strategies for a new project management software targeting small to medium-sized businesses (SMBs).

For each strategy, provide:

1. A brief description of the strategy.

2. The primary goal it addresses (e.g., lead generation, brand awareness).

3. Two specific content ideas with headlines.

4. A suggested distribution channel.

Ensure the tone is professional and results-oriented.

"""

Example Output for Refined Prompt:

Here are three actionable content marketing strategies for a new B2B SaaS project management software targeting SMBs:

Strategy 1: Educational Webinar Series

Description: Host a series of webinars demonstrating how the software solves common SMB project challenges.

Goal: Lead Generation, Product Education

Content Ideas:

- Headline: "Streamline Your Workflow: A 30-Minute Guide to Project Management for SMBs"

- Headline: "Beyond Spreadsheets: How Our PM Software Boosts SMB Productivity by 25%"

Distribution: LinkedIn Events, Targeted Email Campaigns, Industry Forums

Strategy 2: Problem-Solution Blog Series

Description: Publish a series of blog posts addressing specific pain points SMBs face in project management, positioning the software as the solution.

Goal: SEO, Thought Leadership, Lead Nurturing

Content Ideas:

- Headline: "The Hidden Costs of Manual Project Tracking for Small Businesses"

- Headline: "5 Ways Integrated Project Management Software Saves SMBs Time and Money"

Distribution: Company Blog, SEO Optimization, Social Media Sharing

Strategy 3: Client Success Stories & Case Studies

Description: Develop compelling case studies and success stories highlighting how existing SMB clients have benefited from the software.

Goal: Trust Building, Conversion, Social Proof

Content Ideas:

- Headline: "From Chaos to Clarity: How [Client Name] Transformed Project Delivery with Our Software"

- Headline: "Real Results: [Client Name]'s Journey to Efficient Project Management"

Distribution: Website (Dedicated Section), Sales Enablement Materials, Email Marketing

Providing Context and Constraints: Guiding AI to the Right Answer

The more information and boundaries you provide, the better the AI can understand your intent. This is where prompt context and AI response constraints become invaluable.

- Explicit Context: Don't assume the AI knows what you know. If you're referring to a previous conversation point, explicitly state it or include the relevant snippet. If the topic is niche, provide a brief background.

- Using Delimiters: For multi-part prompts or when providing text for the AI to process, use clear delimiters (e.g., triple quotes """, XML tags <text>) to separate instructions from content. This helps the AI parse the prompt correctly.

- Example: Summarize the following text, enclosed in triple quotes: """[Your long text here]"""

- Persona Setting: As seen in the example above, assigning a persona helps the AI adopt a specific tone and knowledge base, making its responses more relevant and engaging.

- Output Format Specifications: Always specify the desired output format. Do you need a list, a paragraph, a table, or JSON? This ensures the AI delivers information in a usable way.

Pro Tip: Create a "prompt template library" for common tasks you perform regularly. This saves time and ensures consistency in your AI interactions.
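A prompt template library can be as simple as a dictionary of reusable templates with delimiters built in. The sketch below uses `string.Template` from the Python standard library; the template names, placeholder names, and the `render_prompt` helper are assumptions made for illustration.

```python
from string import Template

# Hypothetical template library: each entry separates instructions from
# the content to process with explicit delimiters.
PROMPT_TEMPLATES = {
    "summarize": Template(
        "Summarize the following text, enclosed in triple quotes, "
        "in $bullet_count bullet points.\n\n"
        '"""\n$text\n"""'
    ),
    "sentiment": Template(
        "Analyze the sentiment of the following customer review, "
        "enclosed in <review> tags.\n\n"
        "<review>\n$text\n</review>"
    ),
}


def render_prompt(name: str, **values: object) -> str:
    """Fill in a named template; raises KeyError if a placeholder is missing."""
    return PROMPT_TEMPLATES[name].substitute(**values)


print(render_prompt(
    "sentiment",
    text="This product is terrible, it broke after one use!",
))
```

Because `substitute` fails loudly on a missing placeholder, an incomplete prompt is caught before it reaches the model.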

Prompt Engineering Best Practices for Seamless AI Communication & Interaction

Consolidating the knowledge gained, let's outline the prompt engineering best practices that form "The Prompt Engineer's Playbook". These guidelines help you communicate with large language models effectively, fix interactions that are not working, and build trust in the results.

The Prompt Engineer's Playbook: Core Principles for Optimal Interaction

- Be Explicit and Specific: Avoid ambiguity. State your instructions, context, and desired output format clearly. Use precise language.

- Do: "Generate a Python function that calculates the factorial of a number, including docstrings and type hints."

- Don't: "Write some Python code."

- Provide Ample Context: Give the AI all the necessary background information. Who is the audience? What is the purpose? What prior information should be considered?

- Do: "Summarize the attached meeting transcript for our CEO, highlighting key decisions and action items. The CEO prefers bullet points."

- Don't: "Summarize this meeting."

- Assign a Persona/Role: Guide the AI's tone and perspective by telling it who to act as.

- Do: "You are a friendly customer support agent. Respond to this user's query about a delayed delivery."

- Don't: "Answer this question about delivery."

- Use Delimiters for Clarity: When providing text for the AI to process, enclose it in clear separators like triple quotes, XML tags, or markdown code blocks.

- Do: "Analyze the sentiment of the following customer review: 'This product is terrible, it broke after one use!'"

- Don't: "Analyze the sentiment of this customer review: This product is terrible, it broke after one use!"

- Break Down Complex Tasks: For multi-step problems, guide the AI through a Chain-of-Thought process or break the task into smaller, sequential prompts.

- Do: "First, identify the main arguments in the text. Second, evaluate the evidence for each argument. Third, synthesize a conclusion."

- Don't: "Critically analyze this text."

- Provide Examples (Few-Shot Prompting): Show the AI what you want by giving it input-output pairs. This is especially effective for specific formats or styles.

- Do: "Translate the following English phrases to French. Example: 'Hello' -> 'Bonjour'. 'Thank you' -> 'Merci'. 'Goodbye' -> 'Au revoir'."

- Don't: "Translate English to French."

- Specify Output Format and Constraints: Always tell the AI how you want the response structured (e.g., JSON, bullet points, specific length, tone).

- Do: "List five key benefits of cloud computing in a numbered list, each benefit no more than 15 words."

- Don't: "Tell me about cloud computing benefits."

- Iterate and Experiment: Prompt engineering is an iterative process. Test your prompts, analyze the output, and refine. What works for one model or task might not work for another.
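The few-shot principle above lends itself to a small helper that lays out input-output pairs consistently and leaves the final output open for the model to complete. This is a minimal sketch; the `few_shot_prompt` function and its label parameters are hypothetical names, not part of any library.

```python
def few_shot_prompt(
    task: str,
    examples: list[tuple[str, str]],
    query: str,
    input_label: str = "English",
    output_label: str = "French",
) -> str:
    """Build a few-shot prompt: task, worked examples, then the open query."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"{input_label}: {source}")
        lines.append(f"{output_label}: {target}")
        lines.append("")
    # The last pair has no answer, so the model continues the pattern.
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)


print(few_shot_prompt(
    "Translate the following English phrases to French.",
    [("Hello", "Bonjour"), ("Thank you", "Merci"), ("Goodbye", "Au revoir")],
    "Good morning",
))
```

Using the same labels and layout for every example matters more than the exact wording, since the model tends to copy whatever pattern the examples establish.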

Iterative Refinement: The Key to Continuous Improvement

Iterative prompt refinement is not just a best practice; it's the core methodology for improving AI output over time. Rarely will your first prompt yield perfect results, especially for complex tasks.

The process involves:

- Drafting: Write your initial prompt based on your objective and the core principles.

- Testing: Submit the prompt to the LLM.

- Evaluating: Critically assess the AI's response. Did it meet your objective? Was it accurate, relevant, and in the desired format? Note the specific places where the AI deviated or fell short, since these tell you what to change in the prompt.

- Refining: Adjust your prompt based on the evaluation. This could mean adding more context, clarifying instructions, setting new constraints, or trying a different advanced technique.

- Repeating: Continue this cycle until you achieve the desired outcome.

This continuous prompt testing strategy ensures you are constantly learning and adapting your communication style to the AI's capabilities.
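The draft, test, evaluate, refine, repeat cycle can be sketched as a loop. Everything here is an assumption for illustration: `fake_model` is a stand-in for a real LLM call so the example runs on its own, and `evaluate` and `refine` are deliberately simple placeholders for what is usually human judgment.

```python
from typing import Callable


def refine_until_ok(
    prompt: str,
    generate: Callable[[str], str],
    evaluate: Callable[[str], list[str]],
    refine: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, str, int]:
    """Run the test/evaluate/refine cycle; return (prompt, output, rounds used)."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    for round_number in range(1, max_rounds + 1):
        output = generate(prompt)       # Testing
        problems = evaluate(output)     # Evaluating
        if not problems or round_number == max_rounds:
            return prompt, output, round_number
        prompt = refine(prompt, problems)  # Refining, then repeat
    raise AssertionError("unreachable")


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; returns bullets only when asked to."""
    if "bullet points" in prompt:
        return "- Decision: ship v2\n- Action: update docs"
    return "The meeting covered the v2 launch and the documentation."


def evaluate(output: str) -> list[str]:
    """Return instructions to add if the output misses the desired format."""
    if not all(line.startswith("- ") for line in output.splitlines()):
        return ["Format the answer as bullet points."]
    return []


def refine(prompt: str, problems: list[str]) -> str:
    """Append the missing instructions to the prompt."""
    return prompt + " " + " ".join(problems)


final_prompt, final_output, rounds = refine_until_ok(
    "Summarize this meeting.", fake_model, evaluate, refine
)
print(rounds)
print(final_prompt)
```

The `max_rounds` cap reflects a practical point: if several refinements do not converge, the objective or the approach probably needs rethinking, not another small wording change.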

Building Trust: Ethical Considerations and Responsible AI Communication

Effective AI communication goes beyond technical proficiency; it also involves building trust in AI systems and prompting responsibly. As AI becomes more integrated into daily work, ethical considerations become paramount.

- Transparency: Be aware of the AI's limitations. LLMs can generate plausible but false information (hallucinations). Always critically evaluate AI outputs, especially for factual accuracy, and verify information from reliable sources.

- Bias Mitigation: LLMs are trained on vast datasets that reflect human biases. Be mindful of how your prompts might inadvertently elicit biased responses and actively work to mitigate them by specifying inclusive language or diverse perspectives. Research in natural language processing (NLP) and human-computer interaction (HCI) consistently highlights the importance of addressing bias to foster trust and ensure equitable AI interactions.

- Privacy: Be cautious about inputting sensitive or confidential information into public AI models, as the data might be used for further training.

- Over-Reliance: While powerful, AI is a tool. Avoid over-reliance on AI for critical decision-making without human oversight and validation.

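- Explainability: When using advanced techniques, consider whether the AI's reasoning process is transparent enough for your needs. Chain-of-Thought (CoT) prompting, for example, asks the model to write out intermediate reasoning steps, which makes its path to an answer easier to inspect.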

Schulhoff et al.'s "The Prompt Report" also provides best practices and guidelines for prompting state-of-the-art LLMs, including ethical considerations[1]. For further reading on systematic approaches to prompting and its implications, refer to the University of Maryland Survey on Prompting Techniques.

Community Resource: Join prompt engineering communities like the Prompt Engineering Discord to share techniques, get feedback, and stay updated on best practices.

The Future of Prompt Engineering: Human-AI Collaboration Evolved

The landscape of prompt engineering is dynamic, evolving as rapidly as AI technology itself. The future of prompt engineering points towards increasingly sophisticated and seamless human-AI collaboration.

Emerging trends include:

- Automated Prompt Optimization: Research is actively exploring ways to automate the process of prompt refinement. Instead of manual iteration, AI systems might eventually generate and test prompts themselves to achieve optimal results. Academic discussions on automatic prompt engineering are already surfacing in research papers, exploring optimization perspectives[7].

- Agent-Oriented AI Design: Future AI systems may operate as intelligent agents, capable of understanding high-level goals and then autonomously breaking them down, generating sub-prompts, executing actions, and self-correcting to achieve complex objectives. This moves beyond single-turn prompts to multi-agent systems.

- Multimodal Prompting: As AI capabilities expand beyond text to images, audio, and video, prompt engineering will evolve to incorporate multimodal inputs and outputs, allowing for richer and more intuitive interactions.

- Personalized AI Communication: LLMs will likely become even more adept at understanding individual user preferences, communication styles, and historical interactions, leading to highly personalized and context-aware responses without extensive explicit prompting.

- Democratization of Advanced Techniques: As advanced methods like Chain-of-Thought (CoT) and Tree of Thoughts (ToT) become more integrated into user-friendly interfaces, they will become accessible to a broader audience, further empowering non-technical users.

Ultimately, prompt engineering is not just about writing better instructions; it's about evolving how we think about and interact with intelligent systems. It's about forging a new kind of partnership, one where human creativity and AI's processing power combine to achieve unprecedented outcomes.

Start your prompt engineering journey today! Experiment with these techniques, join communities of practice, and continue learning as this exciting field evolves. The ability to communicate effectively with AI is becoming one of the most valuable skills in the digital age.

Additional Resources

Download Capabl’s Prompt Engineering Handbook (Free PDF)

Want to take all these insights with you, neatly packaged in one place? We’ve got you covered.

👉 Download the Complete Prompt Engineering Handbook Here

This free resource includes:

- Step-by-step techniques like Zero-Shot, Few-Shot, and Chain-of-Thought prompting

- Real-world examples for businesses, startups, and creators

- Advanced strategies like Meta-Prompting and Context Amplification

- Practical checklists to help you design effective prompts every single time

Whether you’re a student, marketer, entrepreneur, or just a curious AI enthusiast, this handbook is your go-to guide for mastering the art of AI conversations in 2025.

Elevate Your AI Expertise with the AI Agent Mastercamp

You've learned that prompt engineering is the key to clear AI communication, turning vague requests into valuable outputs. But what if you could move past simple prompts to building entire AI Agents that automate complex tasks and workflows?

That's exactly what the AI Agent Mastercamp by Capabl is designed for.

This isn't just another course on better prompts. This Mastercamp is an intensive, hands-on program that teaches you how to design, develop, and deploy intelligent AI Agents. You’ll learn to leverage advanced AI capabilities to build tools that work autonomously, solving real-world business problems. It's the future of professional automation.

Ready to stop just talking to AI and start building with it?

Enroll in the AI Agent Mastercamp today and become an AI builder!

Taking the Next Step: Your Journey to Advanced AI Mastery

Congratulations! You now have a solid foundation in the principles of systematic prompt engineering, from understanding the core components of an effective prompt to applying zero-shot, one-shot, and few-shot methods. This mastery of the basics is the essential first step in unlocking the potential of Large Language Models. If you are ready to move beyond simply instructing the AI to building sophisticated, high-performance AI Agents, your journey continues. We invite you to explore the next level of prompt engineering with frameworks designed for complex reasoning and dynamic problem solving.

Ready to Level Up?

Tools and Frameworks

- LangChain - Framework for developing applications with LLMs

- Guidance - Microsoft's guidance language for controlling LLMs

- PromptPerfect - Tool for optimizing and refining prompts

Communities

- AI Alignment Forum - Discussions on AI safety and alignment

- Capabl WhatsApp Group - Open Group on AI Usage and New Innovations in Agentic Space

Disclaimer: This content provides general information and guidance on prompt engineering. AI models and their behaviors are constantly evolving, and results may vary. Always test and validate AI outputs for accuracy and suitability for your specific use case. Ethical considerations regarding AI usage are paramount.

References

[1] Schulhoff, S., et al. (2024). The Prompt Report: A Systematic Survey of Prompt Engineering Techniques. arXiv preprint arXiv:2406.06608. Retrieved from https://arxiv.org/abs/2406.06608

[2] Google. (n.d.). Whitepaper on Prompt Engineering. Retrieved from https://archive.org/stream/whitepaper-prompt-engineering-v-4/whitepaper_Prompt Engineering_v4_djvu.txt

[3] Wei, J., Wang, X., Schuurmans, D., Bosma, M., Ichter, B., Xia, F., Chi, E., Le, Q., & Zhou, D. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. arXiv preprint arXiv:2201.11903.

[4] Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T. L., Cao, Y., & Narasimhan, K. (2023). Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv preprint arXiv:2305.10601.

[5] Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., & Cao, Y. (2023). ReAct: Synergizing Reasoning and Acting in Language Models. arXiv preprint arXiv:2210.03629.

[6] Akaike.ai. (n.d.). The State of AI in 2023: Key Trends and Insights.

[7] Li, W., Wang, X., Li, W., & Jin, B. (2025). A Survey of Automatic Prompt Engineering: An Optimization Perspective. arXiv preprint arXiv:2502.11560.

