The Creativity Dial: Mastering Temperature in Large Language Models

In the rapidly evolving world of artificial intelligence, it’s easy to assume that the quality of an AI’s response depends mostly on the model itself—its size, its training data, or its architecture. But in practice, the difference between a stiff, robotic reply and a genuinely insightful or creative one often comes down to a single, overlooked parameter: temperature.

Temperature is commonly described as a “randomness setting,” but that description is both incomplete and misleading. Temperature does not simply inject chaos into the model’s output. Instead, it subtly reshapes how the model makes decisions. It determines whether the model behaves like a cautious analyst who always chooses the safest answer, or like an imaginative thinker willing to explore less obvious possibilities.

Understanding temperature is less about tweaking a number and more about understanding how language models choose words. Once you grasp that, temperature stops being a mysterious slider and becomes a powerful tool you can deliberately control.

How Language Models Choose Words

At its core, a large language model does not “write” sentences the way humans do. It predicts text one token at a time. For every step, it computes a probability distribution over all possible next tokens based on the prompt and the context so far.

For example, given the prompt:

“The sky is …”

The model might internally estimate probabilities like:

- “blue” as extremely likely

- “cloudy” as somewhat likely

- “dark” as context-dependent

- “falling” as grammatically valid but unusual

- “green” as technically possible but highly unlikely

Before a word is selected, the model converts internal scores (called logits) into probabilities using a mathematical function known as softmax. Temperature is applied at this exact moment. Each logit is divided by the temperature value before softmax is calculated.

This seemingly small mathematical adjustment has a dramatic effect:

- Lower temperature sharpens differences between probabilities.

- Higher temperature smooths them out.

As a result, temperature directly controls how strongly the model favors its top choice versus exploring alternatives.

What Temperature Really Means

It helps to think of temperature as a confidence amplifier or suppressor.

- When temperature is low, the model strongly trusts its most likely prediction.

- When temperature is high, the model becomes less confident and more exploratory.

At the extremes:

- As temperature approaches zero, the model behaves deterministically, always choosing the single most probable token.

- As temperature increases toward very large values, all tokens begin to look equally likely, which eventually leads to randomness.

This is why temperature does not change what the model knows. It only changes how strictly it follows its own beliefs.

The Temperature Spectrum in Practice

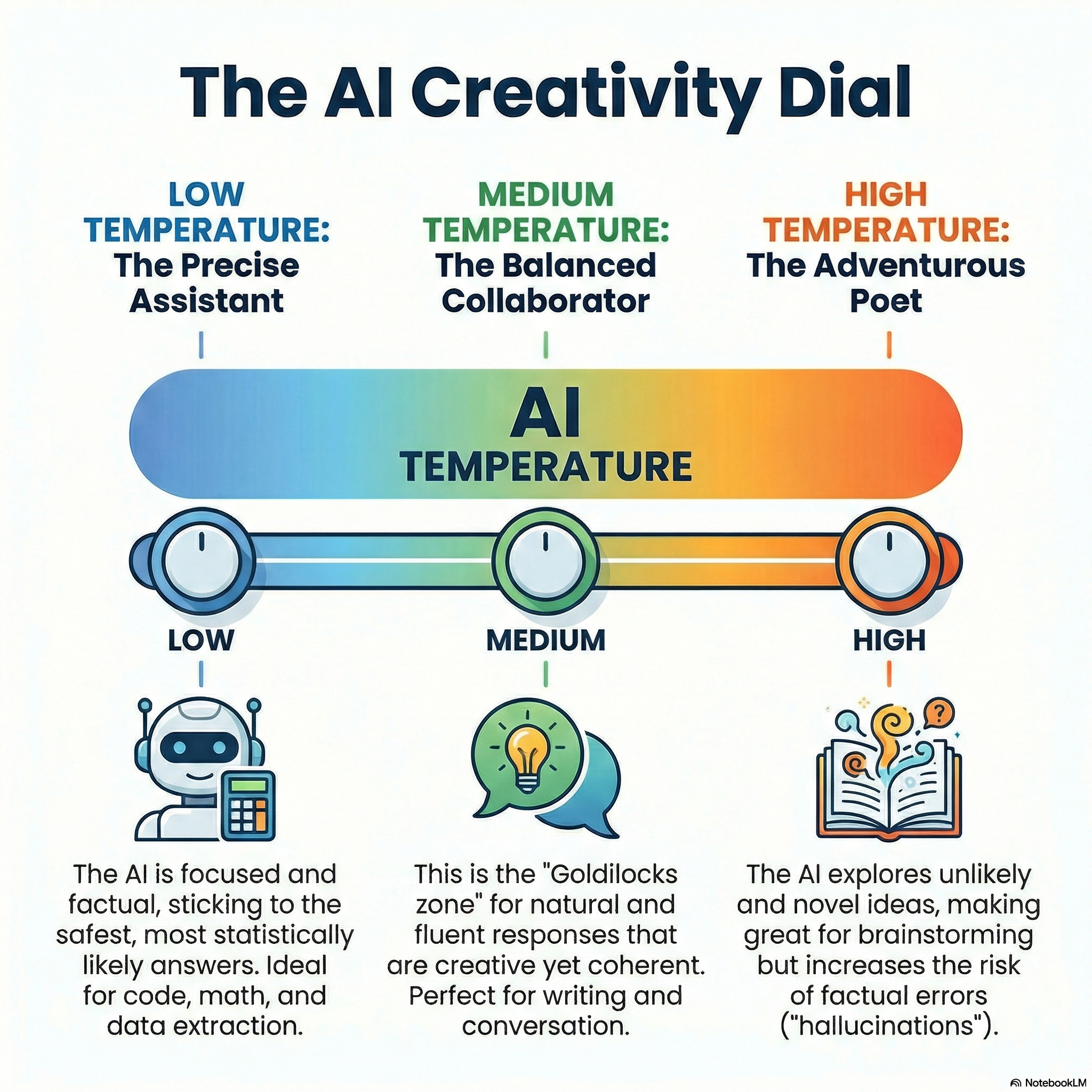

Different temperature ranges produce noticeably different personalities.

Low Temperature: Precision and Control (0.0 – 0.3)

At low temperatures, the model becomes conservative and predictable. It exaggerates the gap between the most likely token and all others.

What this feels like:

- Formal, structured responses

- Repetitive phrasing across runs

- Little variation in wording or style

This behavior is extremely useful when correctness matters more than expressiveness. Code generation, mathematical reasoning, structured data output, and configuration files all benefit from low temperature settings. In these contexts, creativity is not a feature—it is a liability.

A crucial technical detail is that a temperature of exactly 0.0 usually disables sampling altogether. Instead of drawing from a probability distribution, the model simply picks the highest-probability token every time. This is known as greedy decoding.

Medium Temperature: Natural and Balanced (~0.6 – 0.8)

This range is often called the “default” or “Goldilocks zone,” and for good reason. The model still favors strong predictions but allows itself to occasionally choose slightly less obvious words.

What this feels like:

- Natural conversational flow

- Variation in phrasing without loss of clarity

- Responses that feel human rather than mechanical

This temperature range works well for most everyday tasks: writing emails, explaining concepts, summarizing information, drafting blog posts, and engaging in dialogue. It balances fluency, coherence, and subtle creativity.

If you are unsure which temperature to use, this range is usually the safest starting point.

High Temperature: Exploration and Risk (1.0 – 2.0)

At higher temperatures, the model becomes increasingly adventurous. The probability distribution flattens, allowing unlikely tokens to compete with common ones.

What this feels like:

- Unexpected word choices

- Creative metaphors and associations

- Occasionally incoherent or factually incorrect output

At very high values, the model can experience what might be called syntactic drift. It may lose track of grammar, forget to close parentheses, shift topics mid-sentence, or invent facts that sound plausible but are entirely false.

Despite these risks, high temperature has real value. It is particularly useful for brainstorming, poetry, storytelling, and idea generation—any task where novelty matters more than reliability.

Advanced Temperature Strategies

Experienced users rarely rely on a single fixed temperature. Instead, they adapt temperature dynamically to suit different stages of a task.

Separate Creativity from Judgment

One effective approach is to divide work into two phases:

- Generation, using a higher temperature to encourage diverse ideas.

- Evaluation, using a low temperature to assess logic, correctness, or feasibility.

For example, you might ask the model to propose several solutions to a problem at a temperature of 0.9, then feed those solutions back and ask it to select the most robust one at a temperature of 0.0. This allows you to benefit from creativity without sacrificing rigor.

Escaping Deterministic Loops

Interestingly, very low temperatures can sometimes reduce quality. When a model always chooses the top token, it can get stuck repeating a phrase because that repetition is statistically “safe.”

This is why you sometimes see outputs like:

“This system is efficient because the system is efficient…”

A small increase in temperature—sometimes as little as 0.3 or 0.4—can introduce enough variation to break the loop and restore coherence.

Context-Aware Temperature in AI Systems

In complex workflows, especially those involving autonomous agents or tool use, temperature should be context-dependent.

Examples:

- Tool calls, function arguments, or API usage benefit from a temperature of 0.0 to avoid malformed syntax.

- Summarization works best at low but non-zero temperatures to preserve accuracy while allowing concise phrasing.

- Ideation and planning stages benefit from higher temperatures to explore alternative approaches.

Treating temperature as a dynamic control rather than a static setting leads to more robust systems.

The Fundamental Trade-Off

Temperature always represents a trade-off between reliability and diversity.

Higher temperature:

- Produces more varied and creative output

- Increases the risk of hallucinations and fabricated details

Lower temperature:

- Improves factual grounding and consistency

- Increases repetition and stylistic monotony

There is no universally “correct” temperature. The right choice depends entirely on the task and your tolerance for error versus novelty.

Conclusion

Temperature does not make a language model smarter or less informed. It changes how decisively the model acts on what it already knows.

Lowering the temperature turns the model into a careful executor of facts and rules. Raising it turns the model into an explorer—sometimes insightful, sometimes unreliable, often surprising.

Mastering temperature is ultimately about intention. When you understand what you want from the model—precision, creativity, or something in between—you can tune this single parameter to get results that feel deliberate rather than accidental.

Inspire Others – Share Now

Agentic AI Saksham

India’s Only 1st Ever Offline Hands-on program that adds 4 Global Certificates while making you a real engineer who has built their own AI Agents

EV

Saksham

India’s Only 1st Ever Offline Hands-on program that adds 4 Global Certificates while making you a real engineer who has built their own vehicle

Agentic AI LeadCamp

From AI User to AI Agent Builder — Capabl empowers non-coding professionals to ride the AI wave in just 4 days.

Agentic AI MasterCamp

A complete deployment ready program for Developers, Freelancers & Product Managers to be Agentic AI professionals

Table of Contents

- How Language Models Choose Words

- What Temperature Really Means

- The Temperature Spectrum in Practice

- Low Temperature: Precision and Control (0.0 – 0.3)

- Medium Temperature: Natural and Balanced (~0.6 – 0.8)

- High Temperature: Exploration and Risk (1.0 – 2.0)

- Advanced Temperature Strategies

- Separate Creativity from Judgment

- Escaping Deterministic Loops

- Context-Aware Temperature in AI Systems

- The Fundamental Trade-Off

- Conclusion